Satisfaction feedback

This chapter explains what satisfaction is, how to record it, and how to view and use the satisfaction log.

Different Types of Satisfaction

There are many ways to measure user experience. The platform uses the methods below to collect user feedback during the conversation and to measure how users rate both the response content and the interaction experience. This feedback helps reveal possible problems with the agent, which can then be adjusted and optimized based on what customers report.

Skill Satisfaction is the user's rating of the response to a single skill. It is collected with surveys such as CSAT (Customer Satisfaction Score), which ask the user to rate a specific answer. The user rates the agent's response on a scale of 1 to 5.

The agent records ratings of 4 and 5 as "positive" and computes the final CSAT score as CSAT = positive records / total records.
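As a quick illustration of the formula, here is a minimal Python sketch that computes CSAT from a list of 1-5 ratings. The function name `csat` and the choice to return a fraction between 0 and 1 (rather than a percentage) are assumptions made for this example, not part of the platform.

```python
from typing import Iterable


def csat(ratings: Iterable[int]) -> float:
    """Return the CSAT score as a fraction between 0 and 1.

    Ratings of 4 and 5 on the 1-5 scale count as positive;
    CSAT = positive records / total records.
    """
    ratings = list(ratings)
    if not ratings:
        raise ValueError("CSAT is undefined without any ratings")
    for r in ratings:
        if not 1 <= r <= 5:
            raise ValueError(f"rating out of range 1-5: {r}")
    positive = sum(1 for r in ratings if r >= 4)
    return positive / len(ratings)


# 3 of the 5 ratings are 4 or 5, so CSAT = 3 / 5
print(csat([5, 4, 3, 5, 1]))  # prints 0.6
```

Multiply the result by 100 if you prefer to report CSAT as a percentage.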

Session Satisfaction is the user's rating of the overall conversation experience, collected at the end of the session. It uses surveys such as NPS (Net Promoter Score), which ask the user to rate the agent as a whole. The user rates the agent on a scale of 1 to 10.

The agent records scores of 9 and 10 as "Promoters", 7 and 8 as "Passives", and 1 to 6 as "Detractors". The Net Promoter Score is the percentage of Promoters minus the percentage of Detractors.
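The NPS calculation can be sketched the same way. This minimal Python example assumes the 1-10 scale described above and returns a value between -100 and 100; the function name `nps` is illustrative only.

```python
from typing import Iterable


def nps(scores: Iterable[int]) -> float:
    """Net Promoter Score on the 1-10 scale.

    9-10 = Promoters, 7-8 = Passives, 1-6 = Detractors.
    NPS = % Promoters - % Detractors, so it ranges from -100 to 100.
    """
    scores = list(scores)
    if not scores:
        raise ValueError("NPS is undefined without any scores")
    for s in scores:
        if not 1 <= s <= 10:
            raise ValueError(f"score out of range 1-10: {s}")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count toward the total but toward neither percentage.
    return 100 * (promoters - detractors) / len(scores)


# 5 Promoters, 3 Passives, 2 Detractors out of 10: 50% - 20%
print(nps([10, 9, 9, 10, 9, 8, 7, 7, 3, 6]))  # prints 30.0
```

Note that Passives still lower the score indirectly, because they enlarge the denominator without adding Promoters.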

Designing a CSAT survey for a dialogue agent

Generally speaking, only 2% of customers take the initiative to complete a questionnaire. To collect more useful data, the agent's feedback interaction should therefore be as simple as possible: the less effort it takes to give feedback, the more willing users are to give it.

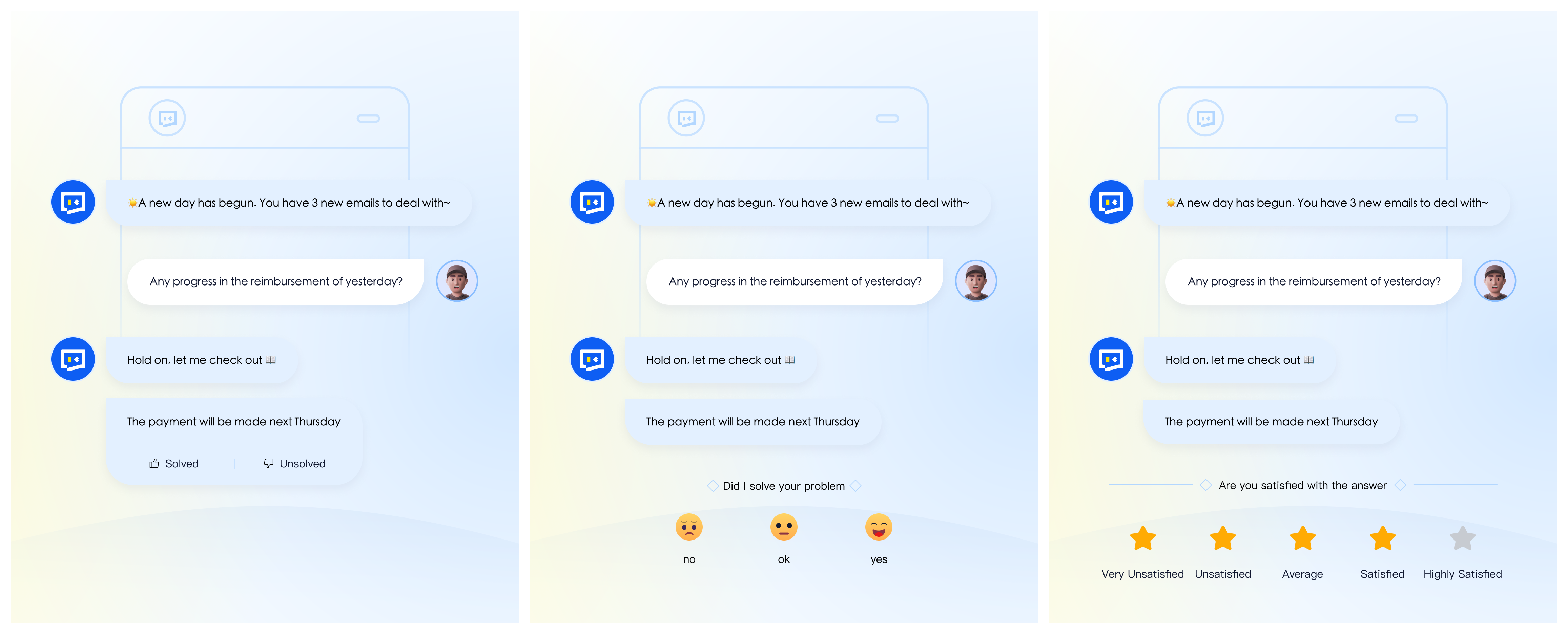

The figure above shows common satisfaction survey styles in dialogue agents. They all follow the same pattern: the agent asks the user whether they are satisfied, and the user chooses a score. However, because projects differ in usage scenarios and feedback needs, the surveys vary in form.

- Question wording: you can phrase the question in different ways to suit the specific business, but try to prompt the user to evaluate their "experience" and "satisfaction".

Sample questions:

- Does this answer help you?

- Did I solve your problem?

- Are you satisfied with my help?

- Evaluation criteria: there is no single standard scoring scheme. Common feedback styles include:

- Binary scale: Resolved 👍 / Unresolved 👎

- Multiple choice: a set of customized options (for example, "Wrong answer")

- Likert scale: from Very unsatisfied (⭐) to Very satisfied (⭐⭐⭐⭐⭐)

- Open-ended: lets customers elaborate in their own words. This is often combined with the options above, for example by adding an open feedback entry when the user gives negative feedback.
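To make the combination of a Likert scale with an open-ended follow-up concrete, here is a minimal Python sketch of how a front end might decide when to show the open feedback entry. `SkillSurvey`, `follow_up_prompt`, and the threshold `negative_max` are hypothetical names for illustration, not platform types; treating ratings of 1 to 3 as negative is an assumption chosen to match the CSAT rule that only 4 and 5 count as positive.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class SkillSurvey:
    """Hypothetical front-end survey definition, not a platform data type."""

    question: str = "Did I solve your problem?"
    # Likert scale: 1 = Very unsatisfied (⭐) ... 5 = Very satisfied (⭐⭐⭐⭐⭐)
    scale: Tuple[int, ...] = (1, 2, 3, 4, 5)
    # Ratings up to this value count as negative (assumed; matches CSAT,
    # where only 4 and 5 count as positive).
    negative_max: int = 3
    open_prompt: str = "Sorry about that. What went wrong?"


def follow_up_prompt(survey: SkillSurvey, rating: int) -> Optional[str]:
    """Return the open-ended prompt after a negative rating, otherwise None."""
    if rating not in survey.scale:
        raise ValueError(f"rating {rating} is not on the scale {survey.scale}")
    return survey.open_prompt if rating <= survey.negative_max else None


survey = SkillSurvey()
print(follow_up_prompt(survey, 2))  # prints the open-ended prompt
print(follow_up_prompt(survey, 5))  # prints None
```

Showing the open entry only after a negative rating keeps the happy path to a single click while still capturing detail where it is most useful.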

Skill Satisfaction

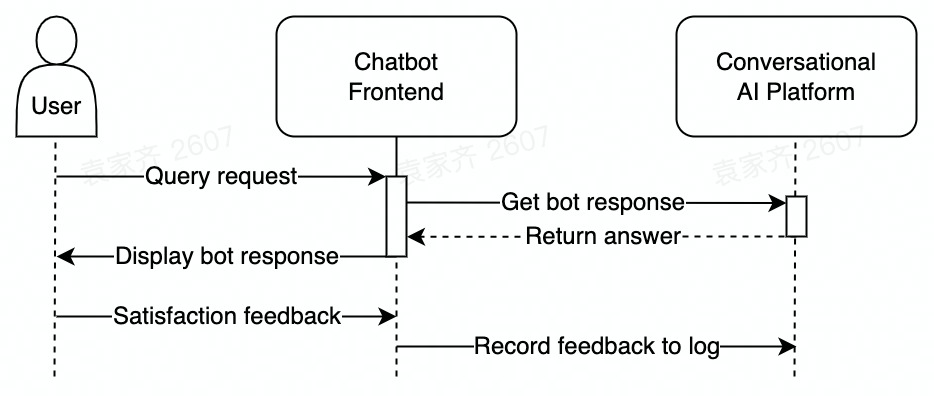

The agent's front end collects each piece of feedback from the user, calls the satisfaction evaluation API provided by the platform, and sends the feedback to the platform. The platform collects the feedback, then summarizes and displays it by skill.

Caution: Currently, the platform only supports satisfaction feedback for FAQs.

API calling

- Call the agent's GetReply API and get the `replyLogId` field.

- Call the SessionSatisfaction API to write the satisfaction evaluation.

  - Fill in {id} with the `replyLogId` obtained from the previous call.

  - See the interface description for details of the parameters.
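The two-step call sequence can be sketched in Python with the standard library. This is a hypothetical sketch: `BASE_URL`, the path template `/SessionSatisfaction/{id}`, the JSON body field `rating`, and the assumption that `replyLogId` sits at the top level of the GetReply response are all placeholders, so take the real values from the interface description. The request is only built here, not sent.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholders: replace with the real base URL, path, and body
# fields from the platform's interface description.
BASE_URL = "https://api.example.invalid"
SATISFACTION_PATH = "/SessionSatisfaction/{id}"


def extract_reply_log_id(get_reply_response: dict) -> str:
    """Step 1: read replyLogId from a parsed GetReply response.

    Assumes the field sits at the top level of the JSON body.
    """
    try:
        return get_reply_response["replyLogId"]
    except KeyError:
        raise ValueError("GetReply response has no replyLogId field") from None


def build_satisfaction_request(reply_log_id: str, rating: int) -> urllib.request.Request:
    """Step 2: build (but do not send) the satisfaction write request.

    {id} in the path is filled with the replyLogId from step 1.
    The JSON body shape {"rating": ...} is an assumption.
    """
    path = SATISFACTION_PATH.format(id=urllib.parse.quote(reply_log_id, safe=""))
    body = json.dumps({"rating": rating}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


reply = {"replyLogId": "abc123"}  # illustrative GetReply result
req = build_satisfaction_request(extract_reply_log_id(reply), rating=5)
print(req.get_method(), req.full_url)
# prints: POST https://api.example.invalid/SessionSatisfaction/abc123
# To actually send it: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to inspect the payload before wiring it to the real endpoint.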

Satisfaction log and analysis

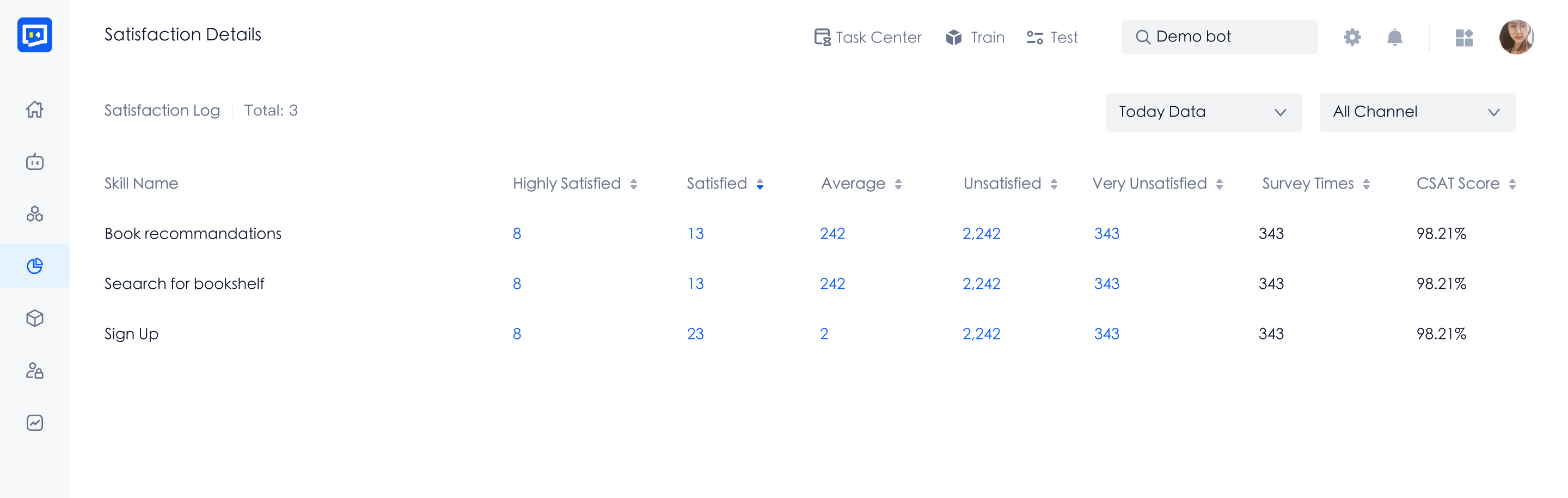

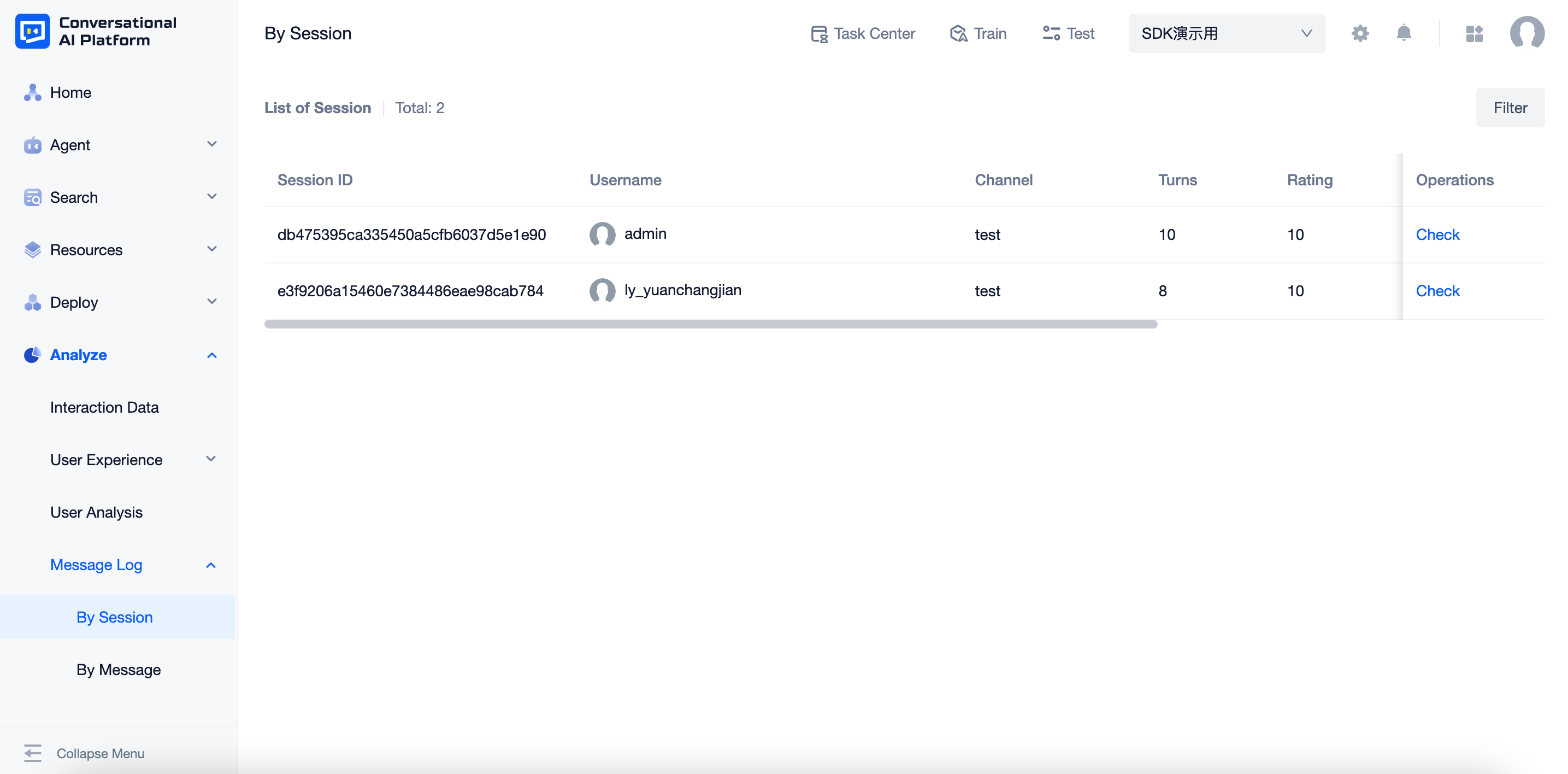

To open the satisfaction details page, select "Monitoring - User Experience - Satisfaction Details" from the menu.

The list shows every skill that has at least one piece of user feedback, and it can be filtered by time period and channel.

For each skill, the list records the number of satisfaction feedback responses, the total number of surveys, and the CSAT score.
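The per-skill summary can be approximated with a simple aggregation. This Python sketch is an illustration under stated assumptions, not the platform's implementation: each record is a hypothetical `(skill, rating)` pair where `rating` is `None` when a survey was shown but not answered, and CSAT is computed over answered ratings only. Skills without any answered feedback are left out, mirroring the list's behavior.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def summarize_by_skill(
    records: Iterable[Tuple[str, Optional[int]]],
) -> Dict[str, dict]:
    """Group (skill, rating) records into per-skill statistics.

    Assumption: rating is None when a survey was shown but not answered.
    CSAT = ratings of 4-5 / answered ratings.
    """
    surveys: Dict[str, int] = defaultdict(int)
    feedback: Dict[str, int] = defaultdict(int)
    positive: Dict[str, int] = defaultdict(int)
    for skill, rating in records:
        surveys[skill] += 1
        if rating is not None:
            feedback[skill] += 1
            if rating >= 4:
                positive[skill] += 1
    # Only skills with at least one answered survey appear in the summary.
    return {
        skill: {
            "feedback": feedback[skill],
            "surveys": surveys[skill],
            "csat": positive[skill] / feedback[skill],
        }
        for skill in surveys
        if feedback[skill] > 0
    }


logs = [
    ("reset_password", 5),
    ("reset_password", 2),
    ("reset_password", None),
    ("opening_hours", None),
]
print(summarize_by_skill(logs))
# prints {'reset_password': {'feedback': 2, 'surveys': 3, 'csat': 0.5}}
```

The skill names in `logs` are made up for the example.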

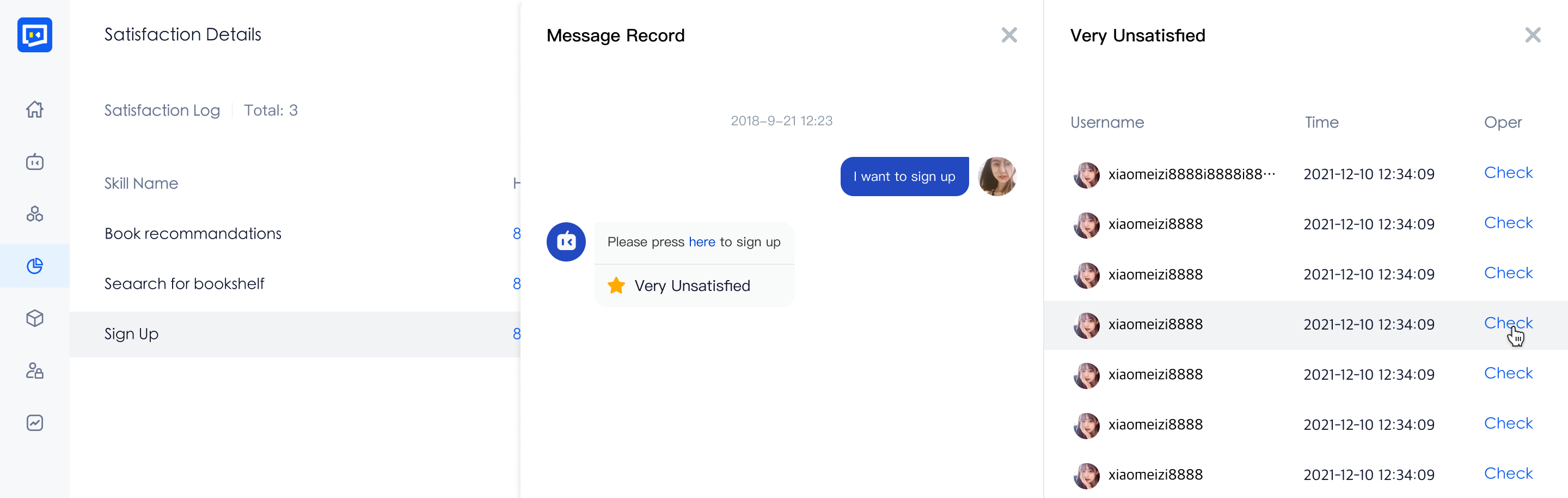

Click the number of satisfaction feedback items to open a drawer listing the session logs of all user feedback on the current skill. From this list you can view the log details of each session.

In the log details, the user feedback details are displayed below the corresponding agent answers.

Session Satisfaction

The agent's front end collects the user's feedback at the end of the session, calls the SessionSatisfaction API provided by the platform, and sends the feedback to the platform. The platform then displays it in the session log.

API calling

- Call the agent's GetReply API and get the `sessionExpirationTime` and `sessionId` fields.

- When the time since the end of the last conversation exceeds `sessionExpirationTime`, the session has ended and the front end can display the satisfaction survey.

- When the session ends, call the SetConversationLog API to inform the platform and write the satisfaction evaluation.
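The end-of-session check can be sketched as follows. This is a hypothetical Python sketch: it assumes `sessionExpirationTime` is an idle duration in seconds (confirm the unit in the GetReply documentation), and the body returned by `build_session_end_payload` uses placeholder field names, not the documented SetConversationLog parameters.

```python
import time
from typing import Optional


def session_ended(
    last_reply_at: float,
    session_expiration_time: float,
    now: Optional[float] = None,
) -> bool:
    """True once the idle time since the last reply exceeds sessionExpirationTime.

    Assumes both values are in seconds.
    """
    if now is None:
        now = time.time()
    return now - last_reply_at > session_expiration_time


def build_session_end_payload(session_id: str, nps_score: int) -> dict:
    """Hypothetical body for SetConversationLog; field names are placeholders."""
    if not 1 <= nps_score <= 10:
        raise ValueError("session satisfaction uses a 1-10 scale")
    return {"sessionId": session_id, "satisfaction": nps_score}


# Values as they might come back from GetReply (illustrative only).
reply = {"sessionId": "s-42", "sessionExpirationTime": 600}
last_reply_at = 1_000.0
if session_ended(last_reply_at, reply["sessionExpirationTime"], now=1_700.0):
    # 700 s idle > 600 s: show the 1-10 survey, then report the answer.
    print(build_session_end_payload(reply["sessionId"], nps_score=9))
# prints {'sessionId': 's-42', 'satisfaction': 9}
```

Passing `now` explicitly makes the check deterministic and easy to test; in a real front end you would typically run it on a timer after each reply.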

Note: If the SetConversationLog API is not called, the session ends automatically once the session expiration time elapses.

Configuration in the Web Widget

The platform's Web Widget channel has built-in session satisfaction evaluation. For details, see Web Widget - Satisfaction Survey.

Benefits of Satisfaction Survey

A satisfaction survey is an opportunity to collect insightful feedback from real users, who are best placed to help improve the business. Although the agent cannot solve every problem, finding and fixing problems promptly is essential to the agent's continued development and growing capability.

Through Skill Satisfaction surveys, trainers can directly spot problems in the dialogue agent that are otherwise hard to detect, and then improve its answers and process interactions. This strengthens the agent's dialogue ability, raises its problem-solving rate, and improves the user experience. A single score is hard to interpret in isolation, but changes in the score over time show whether the agent is getting better.

Setting up and managing this feedback also shows customers that you care about their opinions and want to improve. Actively asking users for feedback so that you can better meet their needs lets them feel the human warmth behind the agent.