UI image automation

In the previous chapter, we talked about UI elements and how to select a UI element as the target for "UI element automation". However, a suitable UI element cannot always be found to serve as the target. For those cases, we need to learn "UI image automation".

Why can't I use UI elements?

As mentioned in the previous chapter, when we search for and operate UI elements, we are actually calling interfaces provided by the software that contains those UI elements. What Laiye Automation Platform does is unify these different kinds of interfaces, so that process authors do not need to pay attention to the details. However, some software does not provide an interface for finding and operating UI elements, or it provides one but disables it in the final release. Such software includes:

- Virtual machines and remote desktops

This category includes Citrix, VMware, Hyper-V, VirtualBox, remote desktop (RDP), and so on. These programs display a separate operating system that is completely isolated from the operating system running Laiye Automation Platform, so Laiye Automation Platform naturally cannot operate the UI elements inside that other operating system.

Of course, if conditions permit, Laiye Automation Platform can be installed inside the virtual machine or on the remote computer, together with the software involved in the process. The interfaces provided by that software can then be used directly by Laiye Automation Platform, because everything runs in the same operating system and the local computer only acts as a display.

- DirectUI-based software

In the past, the frameworks for developing Windows software interfaces were provided by Microsoft, including MFC, WTL, WinForm, WPF, and so on. Microsoft was considerate enough to provide automation interfaces for UIs built with these frameworks. In recent years, in order to make software UIs more attractive and easier to build, many vendors and development teams have launched their own Windows UI frameworks. These frameworks are collectively referred to as DirectUI. The UI elements built with them are "drawn": human eyes can see them, but the operating system and other programs do not know where they are. Some DirectUI frameworks provide external interfaces for finding UI elements, while others provide none at all, in which case other programs, including Laiye Automation Platform, naturally cannot find the UI elements.

In fact, the UI of Laiye Automation Platform itself is developed with a DirectUI framework called Electron. Electron does provide an interface for finding UI elements, but it is disabled by default in all publicly released versions. Careful readers may therefore notice that no RPA platform on the market can find the UI elements in Laiye Automation Platform.

- Games

Because game UIs emphasize aesthetics and personalization, their UI elements are generally "drawn", which is similar to DirectUI in principle. Such a UI usually does not provide an interface that tells us where the UI elements are. Unlike DirectUI-based software, a game UI also changes quickly and demands faster response. Generally speaking, RPA platforms are not optimized for games, so they do not work very well in them.

If you want to automate operations in a game, Quick Macro is recommended. Quick Macro is designed specifically for games, with many built-in UI search methods such as single-point color comparison, multi-point color comparison, and image search, and it also runs more efficiently.

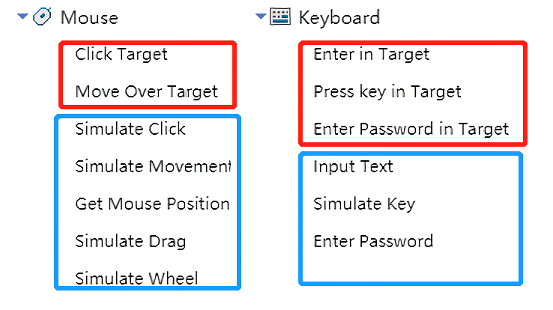

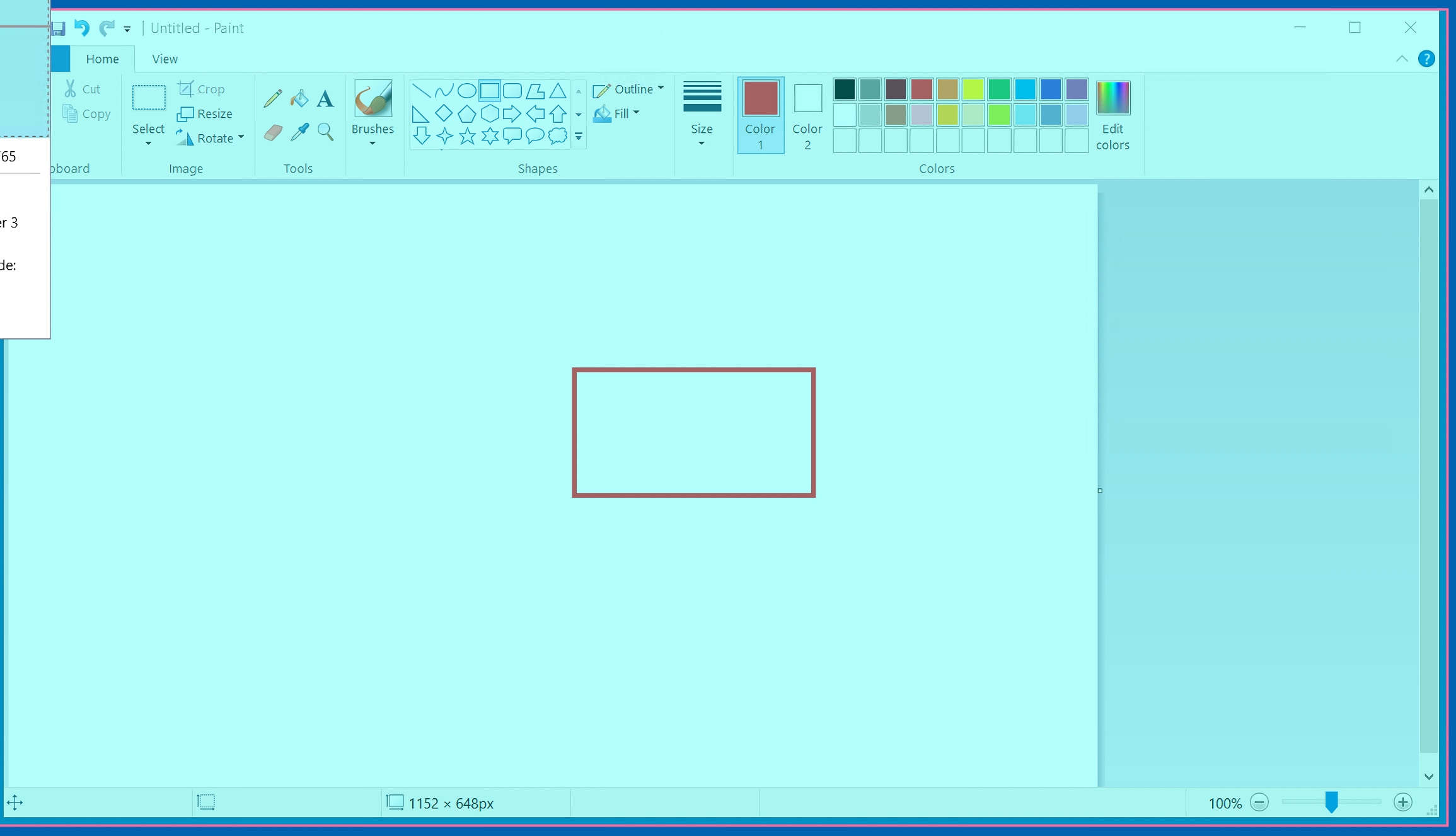

Targetless command

In the previous chapter we introduced "targeted" commands. Laiye Automation Platform also has "targetless" commands. As shown in the figure below, the red box marks the targeted commands and the blue box marks the targetless commands.

If you encounter Windows software that has no UI element to use as a target, targeted commands can no longer be used, but targetless commands still can. Among the targetless commands in the figure, the most important one is "simulate movement", because it requires us to specify a coordinate point, and when the command is executed the mouse pointer moves to that point. After moving, we can use the "simulate click" command to press the left button, so that the correct button is pressed or the focus is set on the correct input box, and then use the "input text" command to type text into the input box that has the focus.

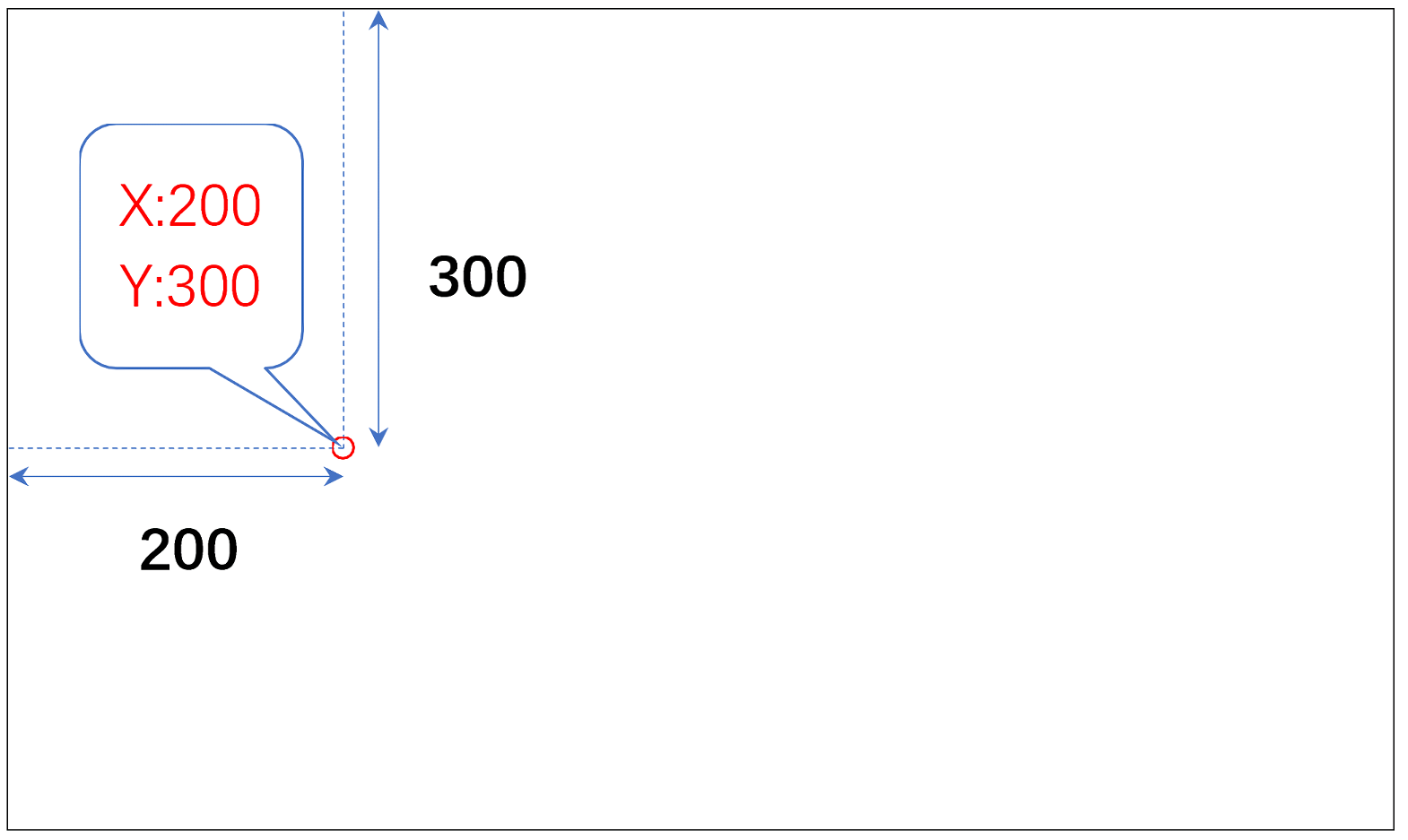

For example, suppose there is an input box at coordinates X: 200, Y: 300. We first use "simulate movement" with the coordinates set to X: 200 and Y: 300, then use "simulate click" to press the left button and set the focus, and finally use "input text" to type as usual. If you use "input text" directly instead, the text will very likely be typed into some other input box.

Here we first need to explain the screen coordinate system of the Windows operating system. If you are already familiar with it, you can skip this part.

In the Windows operating system, every point on the screen has a unique coordinate composed of two integers, called X and Y. For example, the coordinates X: 200, Y: 300 mean that the X value of the point is 200 and its Y value is 300. X starts from 0 at the left edge of the screen and increases from left to right: 0, 1, 2, 3, and so on. Y starts from 0 at the top edge of the screen and increases from top to bottom: 0, 1, 2, 3, and so on. The point with coordinates X: 200, Y: 300 is therefore located roughly where the red circle is in the following figure:

With the two integer values X and Y, the position of any point on the screen can be determined. Laiye Automation Platform has some commands that obtain the position of a point on the screen and output it to a variable. How can one variable hold both the X and the Y value? When we learn BotScript, the language used by Laiye Automation Platform, later on, we will see that it has a "dictionary" data type that can hold multiple values. When Laiye Automation Platform outputs the position of a point, it therefore outputs it to a dictionary variable. If this variable is named pnt, then pnt["x"] and pnt["y"] give the X and Y values of the coordinates.
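To make the dictionary idea concrete, here is a minimal sketch written in Python rather than BotScript (whose syntax is introduced later). The function name edge_distances and the 1920 x 1080 screen size are assumptions chosen only for illustration; only the pnt["x"] and pnt["y"] access mirrors the text above.

```python
def edge_distances(pnt, screen_width, screen_height):
    """Return how far a point is from each edge of the screen, in pixels.

    X counts from 0 at the left edge and Y counts from 0 at the top edge,
    so the last column is screen_width - 1 and the last row is screen_height - 1.
    """
    return {
        "left": pnt["x"],
        "top": pnt["y"],
        "right": screen_width - 1 - pnt["x"],
        "bottom": screen_height - 1 - pnt["y"],
    }


# A point stored as a dictionary, like the pnt variable described above.
pnt = {"x": 200, "y": 300}

# Assumed screen size, for illustration only.
print(edge_distances(pnt, 1920, 1080))
```

The point is much closer to the left and top edges than to the right and bottom ones, which matches the direction in which X and Y increase.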

If the UI element we are looking for sits at a fixed position on the screen, fixed coordinates plus targetless commands are enough to simulate the operation. However, this is rare, because Windows is a multi-window system and every window can be dragged, which changes the position of the UI elements inside it. Moreover, in software such as WeChat, the position of a contact is not fixed: contacts are sorted by the time of the most recent conversation, so their positions may change at any time.

Therefore, writing fixed coordinates directly is generally not recommended in Laiye Automation Platform: too many things can change, and it is difficult to account for all of them. Targetless commands are usually used together with other commands that find the coordinates of a UI element from some of its characteristics and then pass the coordinates to the targetless commands as variables.

In Laiye Automation Platform, the best partner for targetless commands is the image commands. Only when the two are used together can "UI image automation" be achieved.
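The following Python sketch illustrates why fixed screen coordinates are fragile. It assumes, purely for illustration, that a button sits at a fixed offset inside its window; the function name to_screen and all numbers are hypothetical and not part of Laiye Automation Platform.

```python
def to_screen(window_origin, offset_in_window):
    """Convert a position inside a window to a screen position.

    window_origin is the screen position of the window's top-left corner.
    """
    return {
        "x": window_origin["x"] + offset_in_window["x"],
        "y": window_origin["y"] + offset_in_window["y"],
    }


# Hypothetical button, always 200 pixels right and 300 pixels down inside its window.
button_offset = {"x": 200, "y": 300}

# The same button before and after the user drags the window.
before = to_screen({"x": 100, "y": 50}, button_offset)
after = to_screen({"x": 640, "y": 220}, button_offset)

print(before)  # screen position with the window in its first location
print(after)   # a different screen position once the window has moved
```

A process that hard-codes the first position would click the wrong place after the window is dragged, which is why the coordinates should be found at run time.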

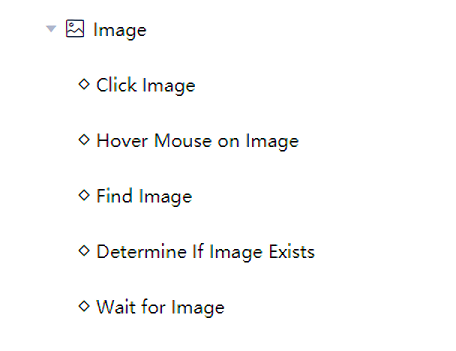

Image command

In addition to the commonly used "mouse" and "keyboard" classes, Laiye Automation Platform also has a very powerful "image" class of commands. In the command area of "Laiye Automation Creator", find "image" and click to expand it. It contains the commands shown in the following figure:

Let's first look at the "find image" command. You specify an image file in a format such as BMP, PNG, or JPG (PNG is recommended because its compression is lossless), and the command scans the specified area of the screen from left to right and from top to bottom to see whether the image appears there. If it does, its coordinates are saved in a variable; otherwise an exception occurs.

This may sound complicated, since both the image file and the scanning area have to be specified. In fact, Laiye Automation Platform makes the operation very simple.
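The scanning idea can be sketched in a few lines of Python. This is a naive exact-match search over small grids of pixel values, written only to illustrate the scan order and the "found or exception" behavior described above; it is not how Laiye Automation Platform is implemented, and the names find_image, ImageNotFound and the region layout are assumptions.

```python
class ImageNotFound(Exception):
    """Raised when the image does not appear in the search area."""


def find_image(screen, template, region=None):
    """Search for template inside screen and return its top-left corner.

    screen and template are lists of rows of pixel values.
    region is (left, top, right, bottom); right and bottom are exclusive.
    """
    t_height, t_width = len(template), len(template[0])
    if region is None:
        region = (0, 0, len(screen[0]), len(screen))
    left, top, right, bottom = region

    # Rows from top to bottom, and within each row from left to right.
    for y in range(top, bottom - t_height + 1):
        for x in range(left, right - t_width + 1):
            if all(screen[y + dy][x:x + t_width] == template[dy]
                   for dy in range(t_height)):
                return {"x": x, "y": y}
    raise ImageNotFound("image not found in the specified area")


# A tiny 5 x 4 "screen" with a 2 x 2 pattern placed at x=2, y=1.
screen = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 2, 0],
    [0, 0, 3, 4, 0],
    [0, 0, 0, 0, 0],
]
template = [[1, 2], [3, 4]]
print(find_image(screen, template))
```

Restricting region to an area that does not contain the pattern makes the function raise ImageNotFound, which corresponds to the exception mentioned above.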

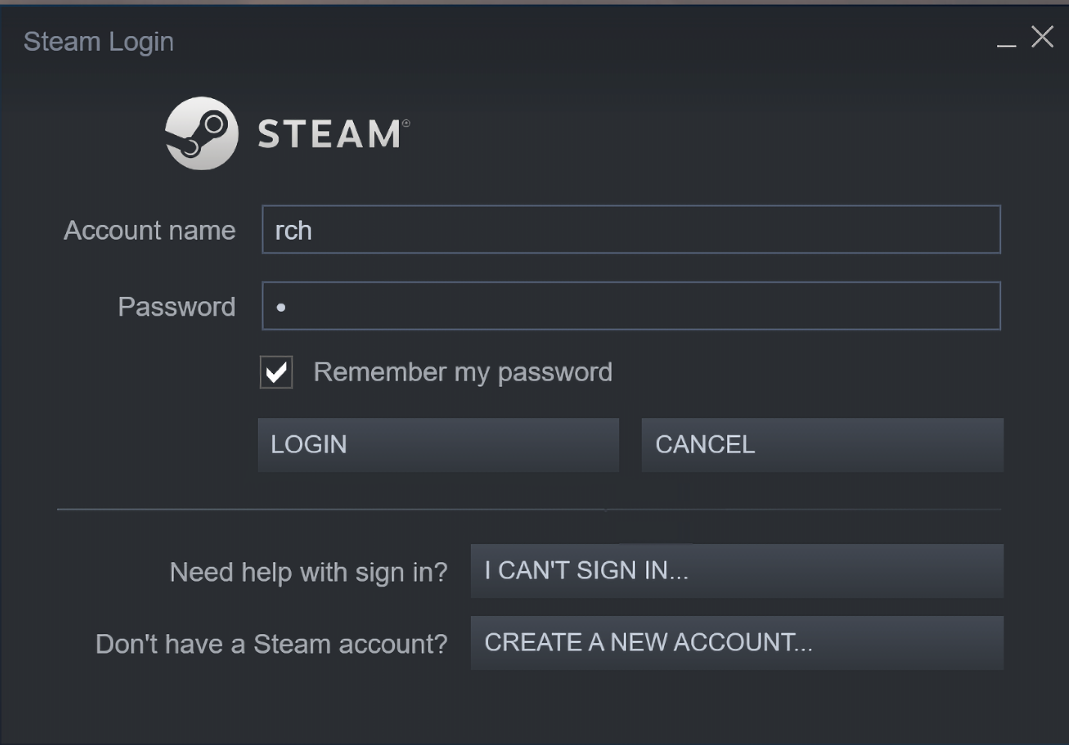

For example, the well-known game platform Steam uses DirectUI technology for its UI. Take its login dialog box as an example (as shown in the figure below): the account input box, the password input box, the login button and the other elements cannot be obtained directly by any RPA tool. This is where the image commands are needed.

Assume that Steam has been started, its login UI is open, and Steam has saved a valid user name and password, so all that remains is to click the "login" button. Edit a process block in "Laiye Automation Creator" and insert a "find image" command by double-clicking or dragging. Click the "unspecified" button on the command and select "On the UI" in the pop-up menu:

As with targeted commands, the UI of "Laiye Automation Creator" is temporarily hidden, and the mouse pointer changes into an arrow with a picture. Press the left button and drag down and to the right to draw a blue frame that contains the image you want to find. Release the left mouse button and you are done!

It may seem that this operation only draws a blue frame, but "Laiye Automation Creator" has actually done two things for us:

- It determines which window the blue frame falls on and records the characteristics of this window. Later, when the image is searched for, this window is found first and the search takes place within it.

- It takes a screenshot of the area inside the blue frame and automatically saves it as a PNG file in the res subdirectory of the directory of the current process. This is the image that will be searched for later.

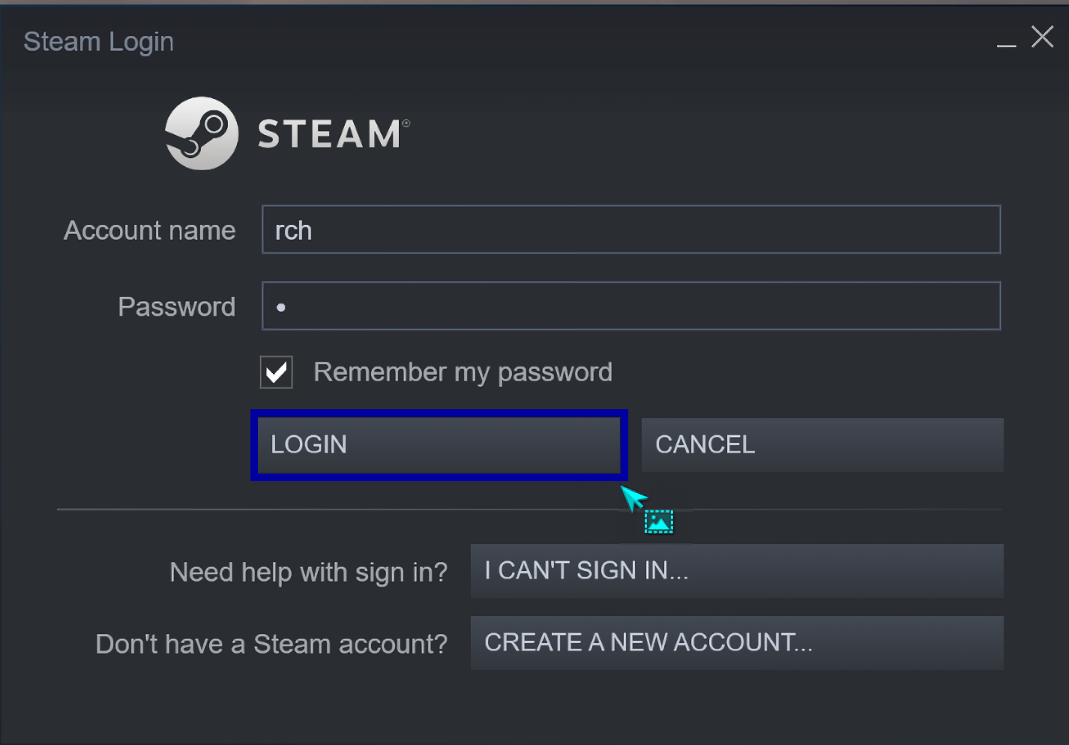

Click the "find image" command to highlight it. The attribute bar on the right then displays the attributes of the command, as shown in the following figure:

The two attributes marked with red boxes are the most important ones, and they correspond to the two things that "Laiye Automation Creator" did for us above. Among the other attributes, "similarity" is a number between 0 and 1 that may contain decimal places. The closer it is to 1, the more strictly every point must match when the image is searched for. It is usually set to 0.9, which tolerates a small number of mismatched points as long as the image roughly matches. The "cursor position" attribute exists because the found image is a rectangle while the command outputs the coordinates of a single point, so it specifies which point of the rectangle is returned; this is usually "center". The "active window" attribute indicates whether the window should be brought to the foreground before the image is searched for. If the window is covered, the image cannot be found correctly even if it is present in the window, so this attribute is usually set to "yes".
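As a rough illustration of "similarity" and "cursor position", the Python sketch below scores a candidate area by the fraction of pixels that match and converts a found rectangle into a single point. The exact similarity measure used by Laiye Automation Platform is not described in this chapter, so the fraction-of-matching-pixels formula is an assumption, as are the function names and the "top-left" option.

```python
def similarity(patch, template):
    """Fraction of pixels in patch that equal the corresponding template pixel."""
    total = sum(len(row) for row in template)
    same = sum(
        1
        for patch_row, template_row in zip(patch, template)
        for p, t in zip(patch_row, template_row)
        if p == t
    )
    return same / total


def cursor_position(left, top, width, height, position="center"):
    """Pick one point of the found rectangle to return as the result."""
    if position == "center":
        return {"x": left + width // 2, "y": top + height // 2}
    if position == "top-left":  # hypothetical alternative, for illustration
        return {"x": left, "y": top}
    raise ValueError("unknown position: " + position)


# Three of the four pixels match, so the score is below a 0.9 threshold.
score = similarity([[1, 2], [3, 4]], [[1, 2], [3, 5]])
print(score, score >= 0.9)

# A 32 x 70 image found at (100, 200) is reported by its center point.
print(cursor_position(100, 200, 32, 70))
```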

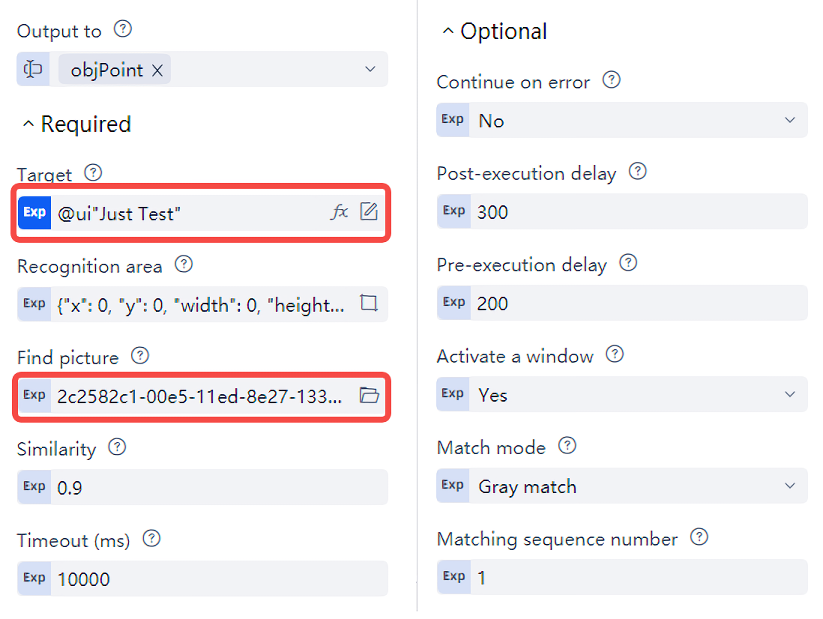

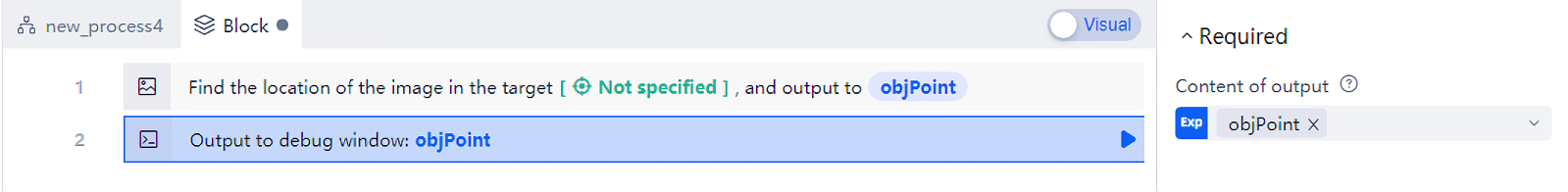

The other attributes do not need to be changed; it is best to keep their default values. In the "output to" attribute, a variable named objPoint has already been specified. If the image is found, the result is saved in this variable. Let's look at what it contains: find "output debugging information" in the "basic command" class of the command area, insert it after the "find image" command, and set its output content to objPoint in the attributes. (Make sure the button marked "Exp" is switched to blue, which indicates "Expert Mode", where variables and expressions can be entered. Then type the variable name objPoint, or press the "fx" button to select a variable directly.) As shown in the figure:

Suppose the image you want to find is visible on the screen. After running this process block, you will get a result like this:

{ "x" : 116, "y" : 235 }

The specific values may differ between computers, but the principle is the same. The value is of the "dictionary" data type. Once it is saved in the variable objPoint, you can get the X and Y values simply by writing objPoint["x"] and objPoint["y"].

Now that the center of the image has been obtained, clicking this position with the mouse simulates the Steam login operation. The "simulate movement" and "simulate click" commands in the "mouse" class are enough to complete the task.

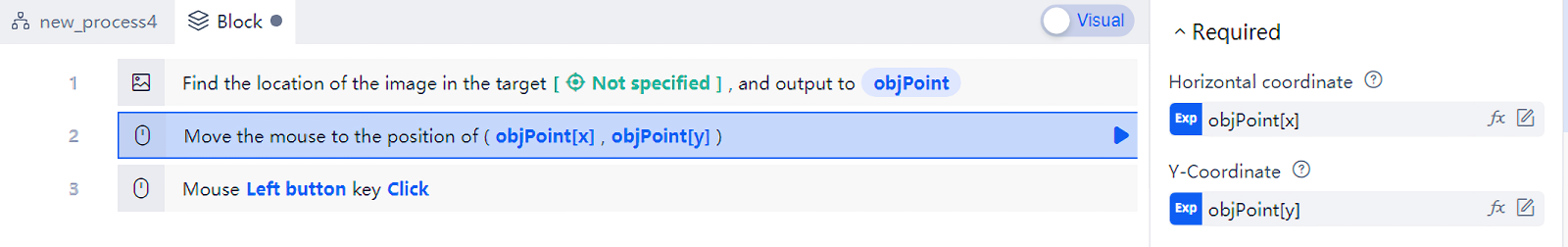

As shown in the following figure, the key attribute of the "simulate movement" command is the screen position to operate on. Switch the "abscissa" and "ordinate" attributes to "Expert Mode" and enter the results of the image search, objPoint["x"] and objPoint["y"], respectively. After the move is complete, add a "simulate click" so that the left button is pressed at the center of the login button. With that, we have simulated the complete operation of clicking the "login" button.

The three commands above are easy to understand; even users who have never studied Laiye Automation Platform can roughly grasp their meaning. However, needing three commands just to click a login button is clearly too cumbersome. Now look back at the commands in the "image" class of Laiye Automation Platform. The first one, "click image", is in fact a combination of "find image", "simulate movement" and "simulate click". Just insert a "click image" command, press the "On the UI" button on it, and drag the mouse to select the window and the image to be found, and you can quickly simulate clicking the "login" button of Steam. Although it is a targetless command, it is no less convenient than a targeted command.
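The way "click image" combines the three steps can be sketched as follows. This is Python, not BotScript, and everything in it is hypothetical: FakeMouse only records what a real mouse would do, and the find argument stands in for the "find image" command.

```python
class FakeMouse:
    """Stand-in for the mouse that records actions instead of performing them."""

    def __init__(self):
        self.position = (0, 0)
        self.log = []

    def move(self, x, y):
        self.position = (x, y)
        self.log.append(("move", x, y))

    def click(self):
        self.log.append(("click",) + self.position)


def click_image(find, mouse):
    """Find the image, move the pointer to it, then click: three steps in one."""
    point = find()                       # step 1: "find image"
    mouse.move(point["x"], point["y"])   # step 2: "simulate movement"
    mouse.click()                        # step 3: "simulate click"
    return point


mouse = FakeMouse()
# The lambda pretends that "find image" located the button at (116, 235).
click_image(lambda: {"x": 116, "y": 235}, mouse)
print(mouse.log)
```

If the find step raises an exception because the image is not on the screen, neither the move nor the click happens.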

With the above foundation, you can draw inferences about other image commands, such as "move the mouse over the image" and "judge whether the image exists", which will not be repeated in this article.

Practical skills

In the previous chapter we learned UI element automation, and in this chapter we learned UI image automation. As we have seen, in most cases targetless commands cannot simply be used on their own; the position to operate on has to be found dynamically on the screen with the help of image commands, which is why we call it "UI image automation".

So when completing a specific process task, which should be used first, UI element automation or UI image automation? Our answer is: give priority to UI element automation! As long as a suitable UI element can be obtained as the target, it should be used. UI image automation has the following disadvantages:

- The speed is usually slower than the automation of UI elements;

- It may be affected by occlusion. When an image is occluded, even if only a part of it is occluded, it may be greatly affected;

- It often relies on image files, and once an image file is lost, the process cannot run normally;

- Some special image commands must be connected to the Internet to run.

Of course, these shortcomings can be partially alleviated. The following tips can help you better use image commands:

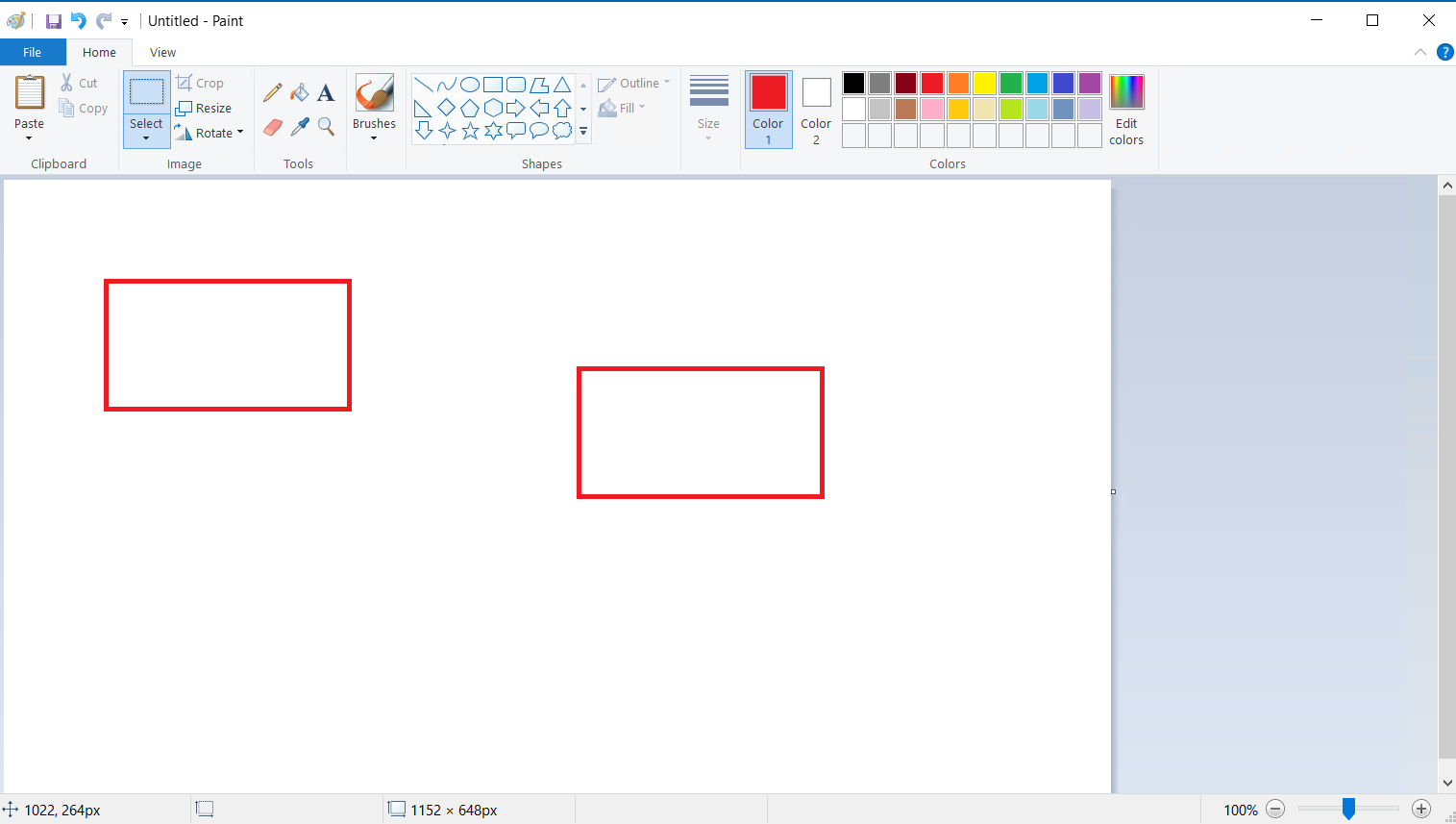

First, remember the word "small". When taking a screenshot, capture as small an image as possible, as long as it still expresses the basic features of the UI element being operated on. When specifying the search area, keep it as small as possible too. This not only improves speed but also makes the search less susceptible to occlusion. For example, for the "login" button in the figure below, there is no need to capture the entire button as in the left figure; selecting the most distinctive part, as in the right figure, is enough.
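A back-of-the-envelope calculation shows why "small" helps speed. The Python sketch below counts the pixel comparisons a naive scan would need in the worst case; real implementations are likely to be smarter, so treat the numbers as an upper bound under that assumption. The function name and the sizes are made up for illustration.

```python
def worst_case_comparisons(area_width, area_height, image_width, image_height):
    """Upper bound on pixel comparisons for a naive scan of the search area."""
    # Number of positions where the image fits entirely inside the area.
    positions = (area_width - image_width + 1) * (area_height - image_height + 1)
    # At each position, every pixel of the image may have to be compared.
    return positions * image_width * image_height


# Whole button searched on the whole screen (assumed sizes).
large = worst_case_comparisons(1920, 1080, 200, 60)

# Small distinctive part searched in a small area (assumed sizes).
small = worst_case_comparisons(400, 300, 40, 20)

print(large, small, large // small)
```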

Second, most image commands support the "similarity" attribute, whose initial value is 0.9. If it is set too low, it may cause "wrong selection"; if it is set too high, it may cause "missed selection" (for the concepts of "wrong selection" and "missed selection", see the previous chapter). Adjust it according to the actual situation and test the effect to find the best value.
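The trade-off can be seen with a toy example in Python. The similarity scores below are invented for illustration: the intended "login" button scores 0.93 because of small rendering differences, and an unrelated but similar-looking button scores 0.78.

```python
def matches_at(scores, threshold):
    """Return the names of all candidates whose score reaches the threshold."""
    return [name for name, score in scores.items() if score >= threshold]


# Hypothetical similarity scores of two areas on the screen.
scores = {"login button": 0.93, "register button": 0.78}

print(matches_at(scores, 0.9))   # only the intended button is accepted
print(matches_at(scores, 0.7))   # too low: the other button is accepted too ("wrong selection")
print(matches_at(scores, 0.99))  # too high: nothing is accepted ("missed selection")
```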

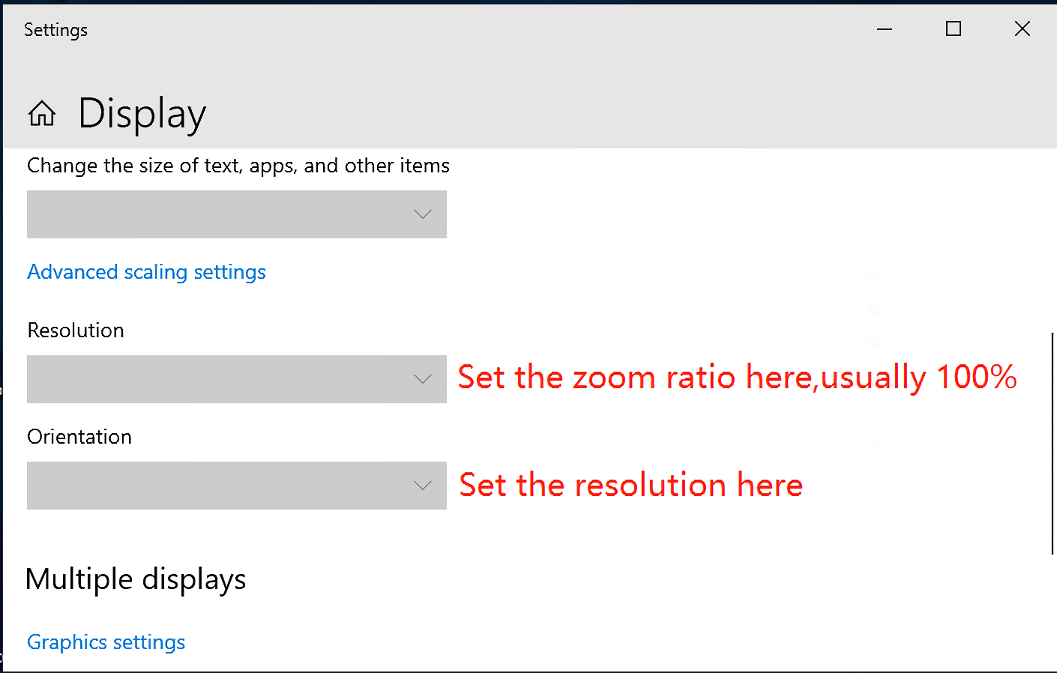

Third, the screen resolution and the display scaling can have a critical impact on image commands, because the UI of the software may look completely different at a different resolution, causing the image commands to fail. Therefore, try to keep the resolution and scaling of the computer that runs the process consistent with those of the computer on which it was developed. The interface for setting resolution and scaling on Windows 10 is shown in the following figure:

Finally, image commands often have to deal with image files. For testing, we can of course use an absolute path such as D:\1.png. However, this requires the same file to exist at the same path on the computer that runs the process; otherwise an error occurs. A better way is to use the folder named res in the folder where your process is located: put images or other files in this folder and refer to them in the process with an expression such as @res"1.png". When the process is published for use by "Laiye Automation Worker", the file is automatically included with it. No matter where "Laiye Automation Worker" places the process, the path represented by the @res prefix is adjusted automatically, so it is always valid.
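The idea behind the @res prefix, resolving a file relative to wherever the process currently lives, can be sketched in Python. How Laiye Automation Platform implements @res internally is not described here; the function name resolve_res and the example directories are assumptions for illustration.

```python
from pathlib import PureWindowsPath


def resolve_res(process_dir, file_name):
    """Build the path of a resource file inside the process's res folder."""
    return PureWindowsPath(process_dir) / "res" / file_name


# The same expression yields a valid path wherever the process is placed.
on_developer_pc = resolve_res(r"D:\flows\login", "1.png")
on_worker_pc = resolve_res(r"C:\worker\jobs\login", "1.png")

print(on_developer_pc)
print(on_worker_pc)
```

In contrast, an absolute path such as D:\1.png stays the same on every computer, whether or not the file exists there.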

In addition, it should be noted that most of the techniques for using image commands described in this chapter are also applicable to OCR commands. The concept and use method of OCR commands are not repeated in this chapter due to space constraints.

Intelligent recognition

As mentioned above, in applications such as virtual machines, remote desktops, DirectUI-based software and games, UI elements cannot be located directly with the "On the UI" function of targeted commands. In these cases, only targetless commands and image commands can be used. However, some of the techniques for image commands are not easy to master, and without them it is very easy to make a "wrong selection" or a "missed selection". For this reason, Laiye Automation Platform provides a set of intelligent recognition functions, another image-based method of locating UI elements. Let's look at how intelligent recognition is used through a concrete example.

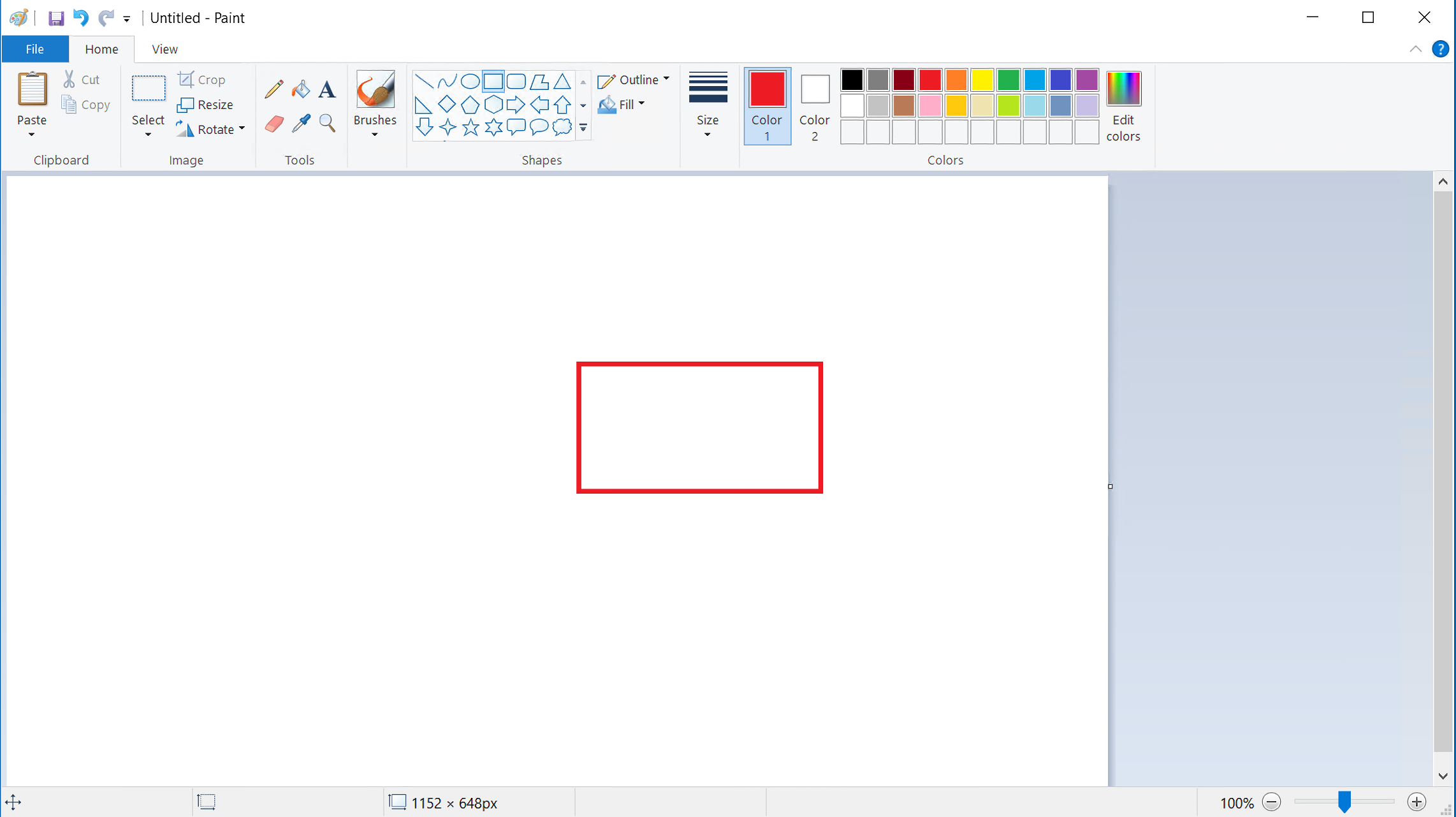

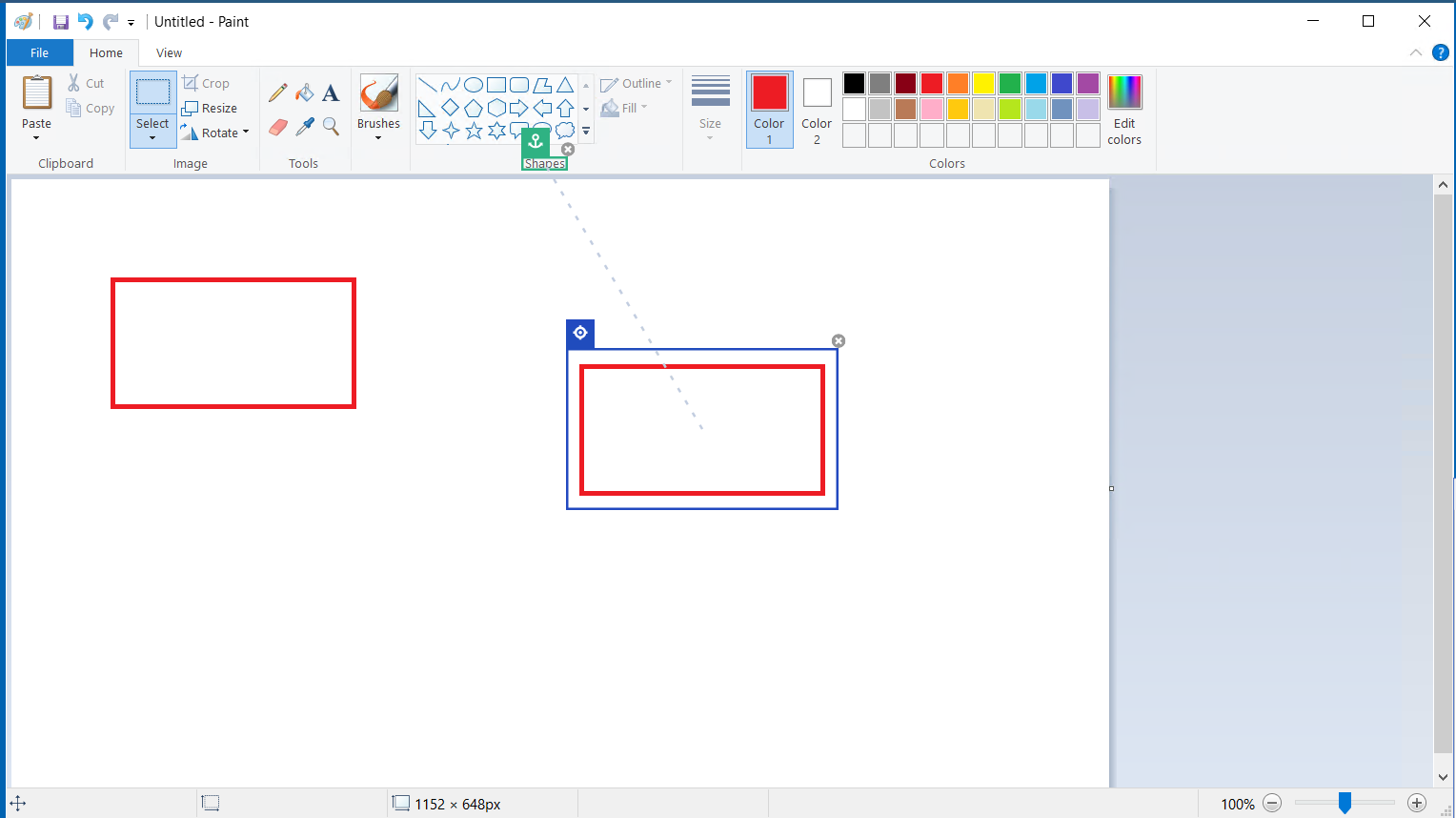

Open the drawing program that comes with Windows and draw a rectangular box. Suppose the requirement is to find and click this rectangular box with Laiye Automation Platform.

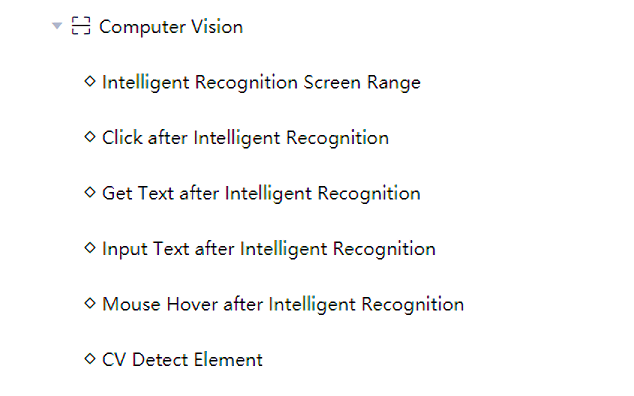

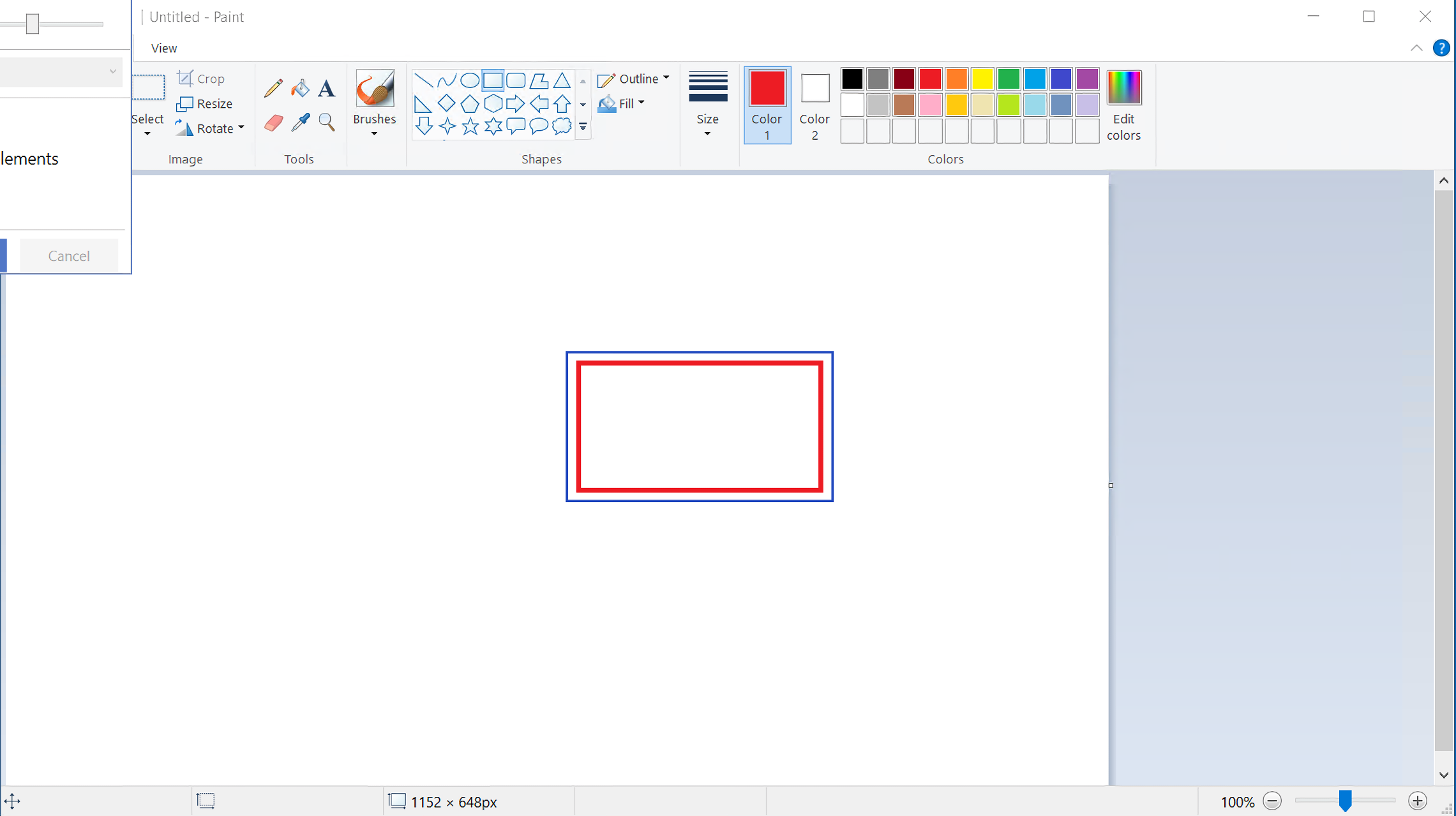

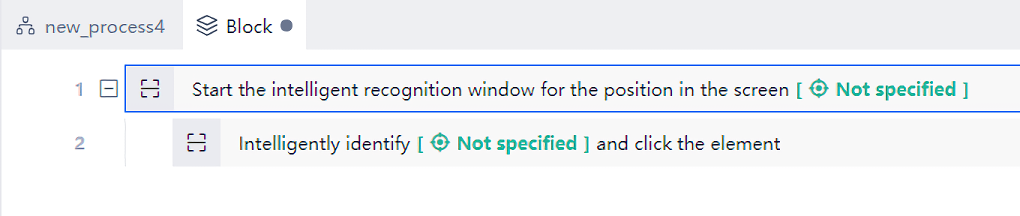

As mentioned above, targeted commands cannot find this rectangular box. Let's see how to find and click it with the intelligent recognition commands. In the command area of "Laiye Automation Creator", find "UI operation" and expand it, then find "intelligent recognition" and expand it to see the group of commands shown in the following figure:

First insert an "intelligent recognition screen range" command and click the "Selector" button on it. The UI of "Laiye Automation Creator" is temporarily hidden, and a translucent mask with a red border and blue background appears and follows the mouse as the target selector. Careful readers may have noticed that this works the same way as the "On the UI" button of targeted commands!

After selecting the screen range, insert a "click after intelligent recognition" command and click the "Selector" button on it. This "Selector" button is again used in the same way as the "On the UI" button of targeted commands. At this point something magical happens: Laiye Automation Platform recognizes the rectangular box we just drew!

In other words, with the "intelligent recognition screen range" command, Laiye Automation Platform uses artificial intelligence image recognition to extract potential elements from the UI that could not be recognized before, and makes them available to subsequent commands, including "click after intelligent recognition", "get text after intelligent recognition", "input text after intelligent recognition", "mouse hover after intelligent recognition", "judge whether an element exists after intelligent recognition" and others. This also explains why commands such as "click after intelligent recognition" must be executed after the "intelligent recognition screen range" command and are only valid within its scope (that is, within the indented range of the "intelligent recognition screen range" command).

Run the process, and you can see that the rectangular box is clicked successfully.

If the UI contains two or more UI elements with the same appearance, how does Laiye Automation Platform locate the one we want? Let's explain with a concrete example: open the drawing program and copy the rectangular box drawn earlier, so that the drawing UI contains two identical rectangular boxes. Suppose the requirement now is to find and click the rectangular box on the right with Laiye Automation Platform.

First, click the "Selector" button of the "intelligent recognition screen range" command again. Because the screen content to be recognized has changed, intelligent recognition has to be performed on the screen again. This shows that the "intelligent recognition screen range" command is actually a static command whose recognition is performed in advance, rather than a selector that works dynamically while the process runs. Whenever the screen image changes, intelligent recognition must therefore be performed again.

Then click the "Selector" button of the "click after intelligent recognition" command. Both rectangular boxes are now selectable. Select the one on the right: it is covered by the mask, and a dashed line connects it to a "shape", as shown in the following figure:

It turns out that Laiye Automation Platform uses a technique called "anchor point" to tell apart two or more elements with the same appearance. An anchor point is a unique element on the screen (such as the "shape" above). The position offset and azimuth angle of each rectangular box relative to the anchor point are different, so they can be used to locate one particular rectangular box uniquely.
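The anchor idea can be illustrated with a small Python sketch. It is only an approximation of the principle described above, not the platform's actual algorithm: the function names, the coordinates, and the rule of picking the candidate whose offset is closest to the recorded one are all assumptions.

```python
import math


def relative_to_anchor(anchor, candidate):
    """Return the distance and azimuth angle (in degrees) from anchor to candidate."""
    dx = candidate["x"] - anchor["x"]
    dy = candidate["y"] - anchor["y"]
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx))


def pick_by_anchor(anchor, candidates, recorded_offset):
    """Choose the candidate whose offset from the anchor best matches the recorded one."""
    expected_dx, expected_dy = recorded_offset
    return min(
        candidates,
        key=lambda c: math.hypot(
            c["x"] - anchor["x"] - expected_dx,
            c["y"] - anchor["y"] - expected_dy,
        ),
    )


# Hypothetical positions: one unique anchor and two identical-looking boxes.
anchor = {"x": 500, "y": 50}
left_box = {"x": 300, "y": 400}
right_box = {"x": 600, "y": 400}

# Offset recorded when the right box was selected at design time.
recorded_offset = (100, 350)

print(pick_by_anchor(anchor, [left_box, right_box], recorded_offset))
print(relative_to_anchor(anchor, left_box))
print(relative_to_anchor(anchor, right_box))
```

The two boxes look the same, but their distance and angle relative to the anchor differ, which is enough to tell them apart.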

After running the process, you can see that you have successfully clicked the rectangular box on the right.