Data Processing

Data is an inevitable by-product of the growth of information technology. Collecting, organizing, processing, and analyzing data is an indispensable part of RPA. This chapter follows the data processing sequence and covers data acquisition, data reading, data processing, and data storage. It deals with different data formats, such as web page data, application data, file data, JSON, and strings, and with various data processing methods, such as regular expressions, collections, and arrays.

Data Acquisition Method

Web Data Scraping

Because of its variety and timeliness, web page data is the most common data source. Collecting data from web pages is commonly called "web scraping" or "crawling". Laiye RPA has a built-in "Data Capture" function that captures web data in real time. Because it is used so often, the "Data Capture" button is placed directly on the toolbar.

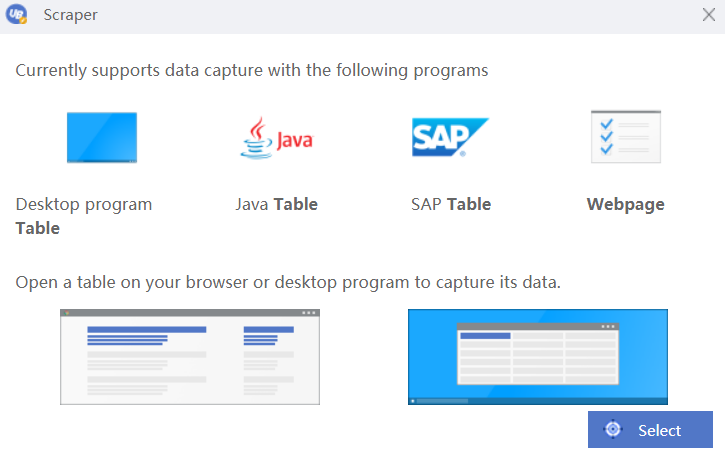

Clicking the "Data Capture" button opens an interactive wizard that guides you through capturing data. Laiye RPA currently supports data capture from four kinds of targets: desktop application tables, Java tables, SAP tables, and web pages. Here we use a web page as the example.

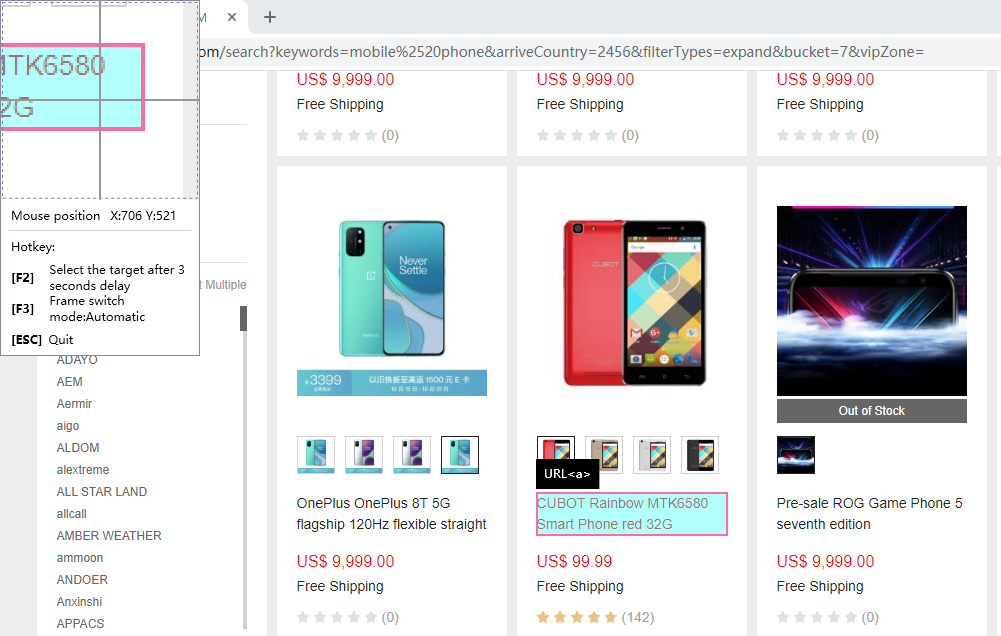

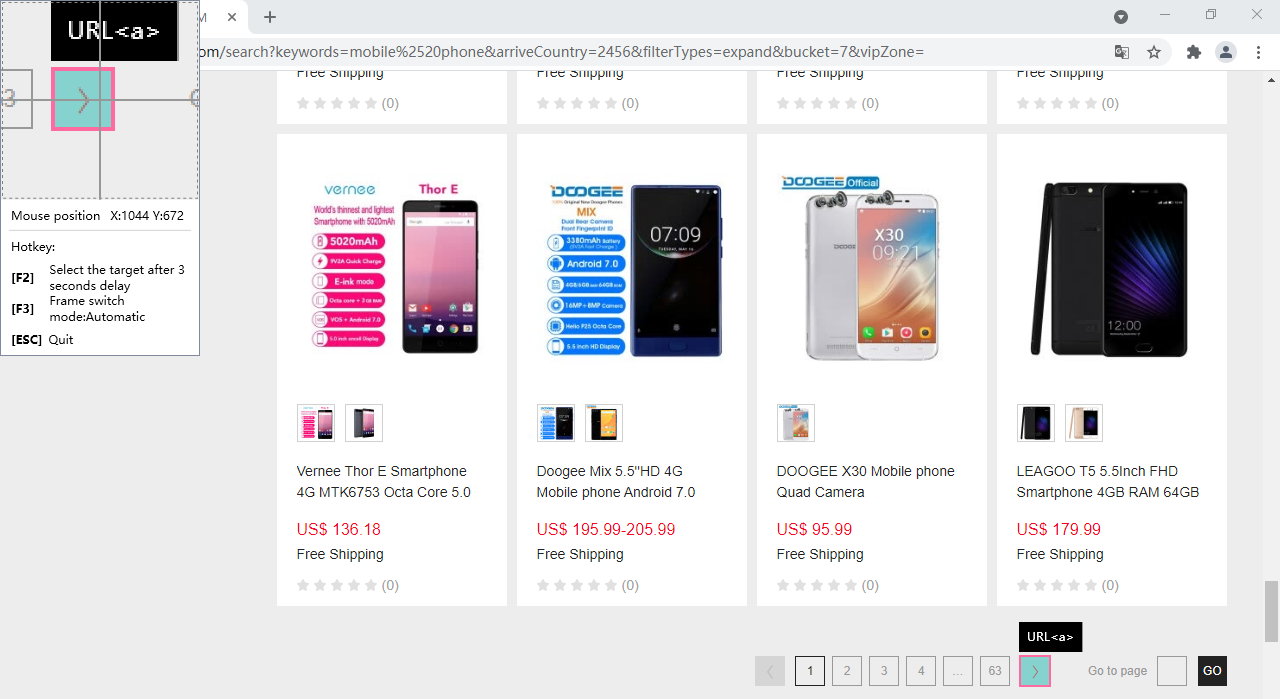

Click the "Select Target" button; it works the same way as the "Select Target" button in the target commands covered earlier. Note that Laiye RPA does not open the web page or application you want to capture from, so you must open the web page or desktop application table beforehand. You can do this manually or with other Laiye RPA commands. In this example, we capture mobile phone product information from a shopping website. We use the "Start New Browser" command under "Browser Automation" to open the website, use the "Set Element Text" command to enter "mobile phone" in the search bar, and then use the "Click Target" command to click the "Search" button.

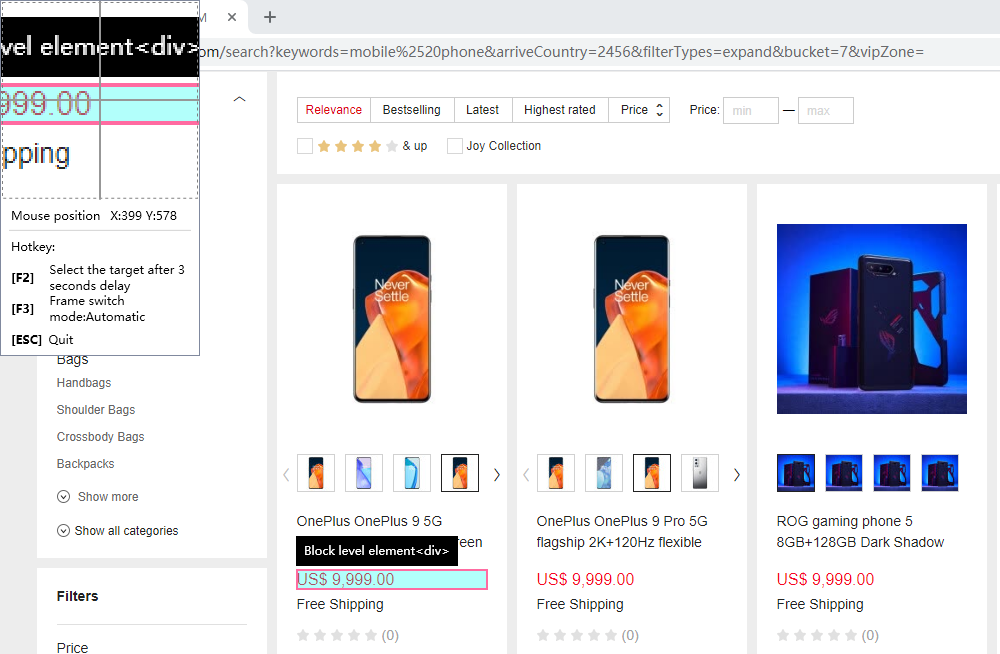

Once the web page is ready, we locate the data on it. We start with the product name: carefully select the target so that it covers exactly the product name (the selected target is highlighted with a red frame and a blue mask).

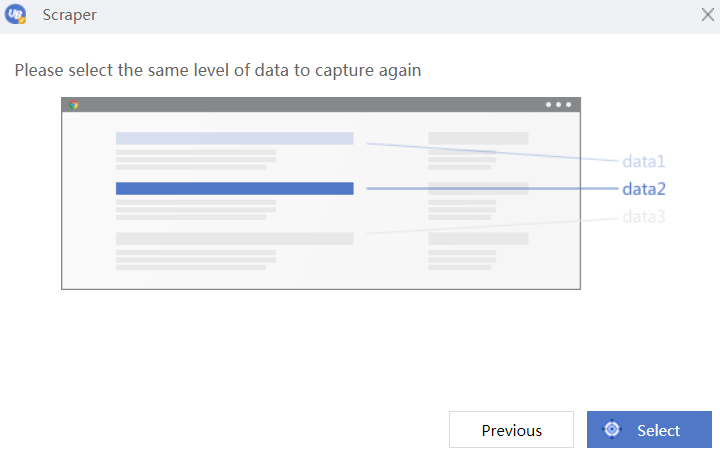

Laiye RPA then displays the message "Please select the same level of data and capture it again". This is necessary because we want to capture data in batches, so Laiye RPA must find the features that all the items share. After the first selection, Laiye RPA knows the features of one target, but it cannot tell which of them are common to all targets and which belong only to the first one. Selecting a second target at the same level lets Laiye RPA work out what all the targets have in common.


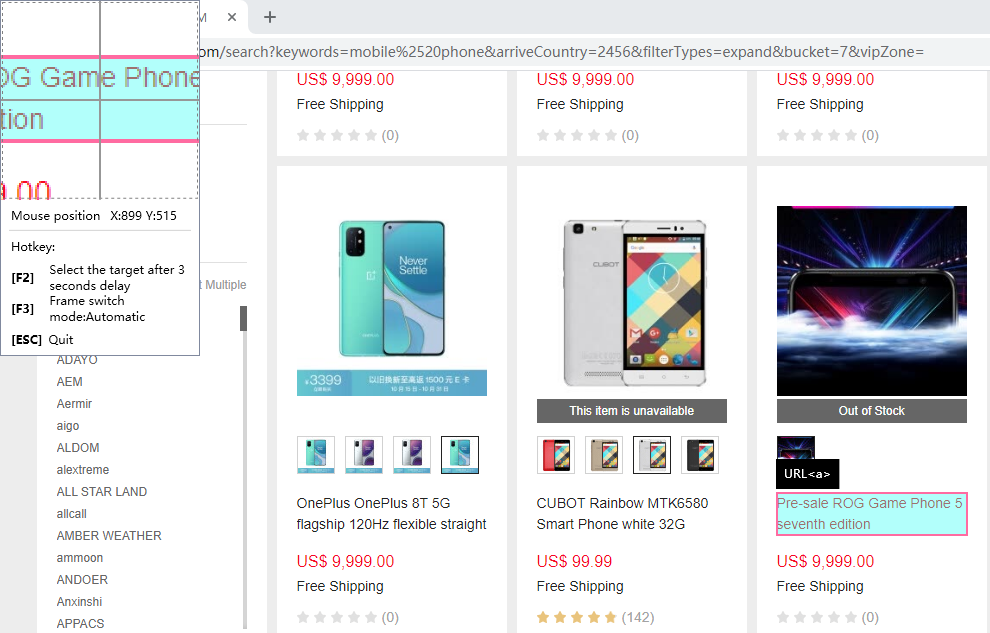

Now select the second target: since the first target was the name of the first product, select the name of the second product. Select it carefully so that the second target is at the same level as the first; web pages often have deep element hierarchies, and the same text can belong to several nested elements. Laiye RPA checks this for you: if it reports an error, your selection is most likely wrong. You can also pick the third or fourth product name instead; this does not affect the capture results.

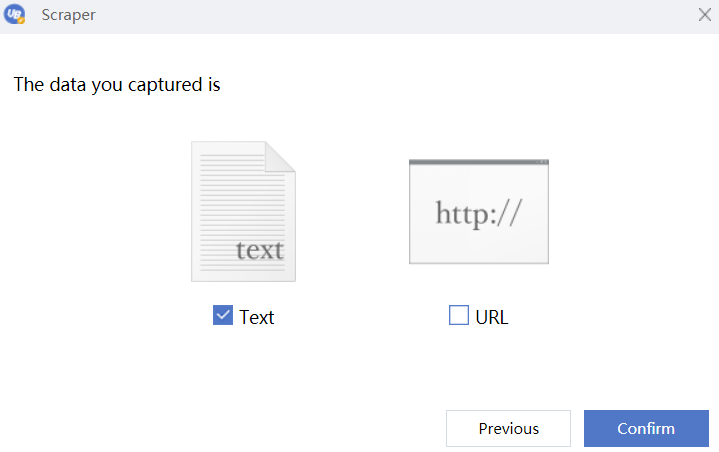

After both targets have been selected, Laiye RPA displays another message box asking "Would you like to capture the text or the text link?" Choose whichever you need.

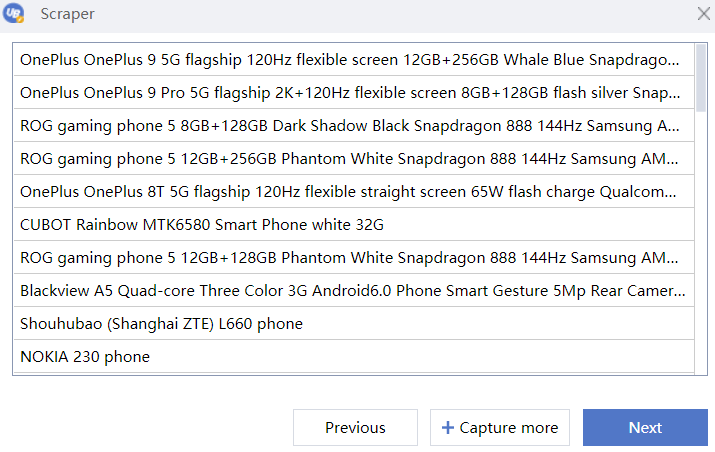

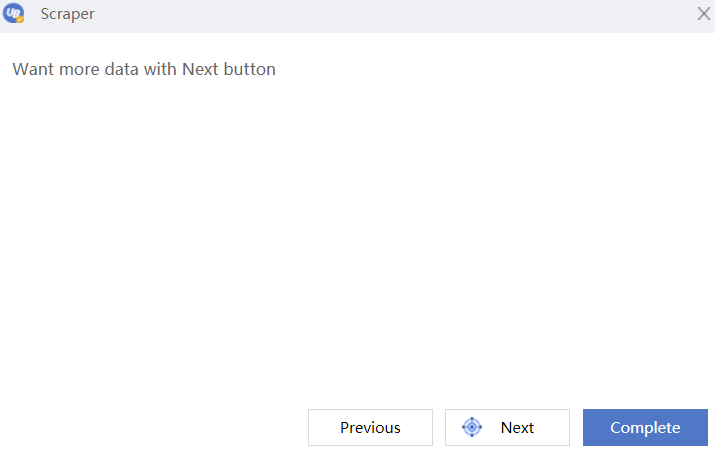

After you click "OK", Laiye RPA shows a preview of the captured data so you can check whether it matches your expectations. If it does not, click "Previous" to redo the capture. If it does and you only want the product names, click "Next". To capture more fields, such as the product price, click "Fetch more data"; Laiye RPA then opens the target selection interface again.

This time, select the text element that contains the product price.

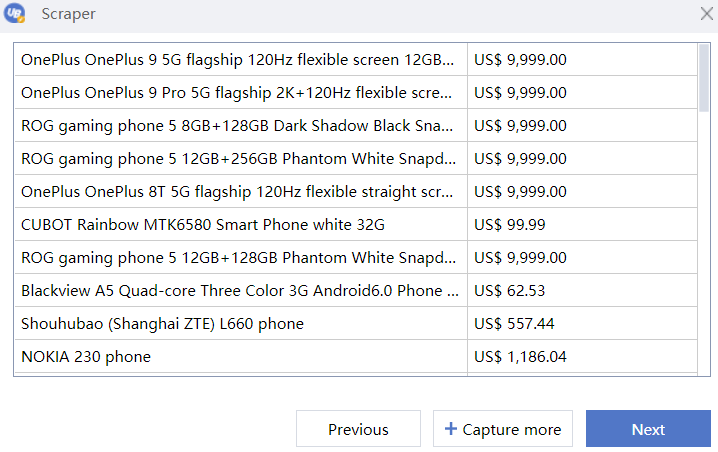

As before, select two targets and preview the results again; the preview now shows that both the product name and the product price have been captured.

You can repeat this procedure to add more fields, such as the product image URL or the number of reviews. When you have all the fields you need, click "Next". The wizard then asks "Would you like to capture more data with the 'Next Page' button?"

If you think of the web page data as a two-dimensional table, the steps so far add columns (product name, price, and so on), while capturing the page navigation button adds rows by fetching data from the following pages. If you only want data from the first page, click "Finish"; to capture data from several pages, click "Capture Page".

After you click "Capture Page", a target selection guide pops up. Select the page navigation button on the web page, which here is the ">" button.

When all the steps are completed, you can see that Laiye RPA has inserted a "data capture" command into the command assembly area, and all the attributes of the command have been filled in through the target selection wizard. For example, the content of the "target" attribute is:

```json
{
  "html": {
    "attrMap": {
      "id": "J_goodsList",
      "tag": "DIV"
    },
    "index": 0,
    "tagName": "DIV"
  },
  "wnd": [{
    "app": "chrome",
    "cls": "Chrome_WidgetWin_1",
    "title": "*"
  }, {
    "cls": "Chrome_RenderWidgetHostHWND",
    "title": "Chrome Legacy Window"
  }]
}
```

You can further adjust some attributes of the "Data Capture" command: "Number of Captured Pages" sets how many pages of data are fetched; "Number of Returned Results" limits how many results are returned per page (-1 means no limit); and "Page turning interval (ms)" sets how many milliseconds to wait after turning a page (on a slow network, a longer interval may be needed for the page to load completely).

General File Reading

Besides web pages, files are another very important data source. Laiye RPA provides commands for several file formats, including general files, INI files, and CSV files. Let us start with general files.

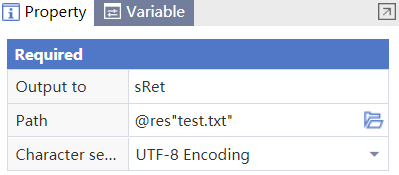

In the "General File" directory of "File Processing" in the Command Center, select and insert a "Read File" command. The command has three attributes. The "File path" attribute holds the path of the file to read; here it is @res"test.txt", which refers to the test.txt file in the res subdirectory of the process directory. The "Character set encoding" attribute must match the encoding the file was saved in: for example, many Chinese text files created on Windows use "GBK encoding (ANSI)", and reading such a file with "UTF-8 encoding" or "UNICODE encoding" garbles the Chinese characters. Finally, the "Output to" attribute holds a string variable, sRet, which receives the contents of the file as a single string.
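To see the encoding issue outside Laiye RPA, here is a minimal Python sketch; it is an analogy, not Laiye RPA's implementation. The function name `read_file` is hypothetical, and a throwaway GBK-encoded file in a temporary directory stands in for @res"test.txt". It reads the whole file into one string, as "Read File" does, once with the matching encoding and once with a mismatched one.

```python
import os
import tempfile


def read_file(path: str, encoding: str) -> str:
    """Read an entire text file into one string (hypothetical analogue of "Read File")."""
    # errors="replace" turns undecodable bytes into U+FFFD instead of raising an error.
    with open(path, "r", encoding=encoding, errors="replace") as f:
        return f.read()


# A throwaway GBK-encoded file standing in for @res"test.txt" (assumption).
path = os.path.join(tempfile.mkdtemp(), "test.txt")
with open(path, "wb") as f:
    f.write("你好, RPA".encode("gbk"))

sRet = read_file(path, "gbk")       # matching encoding: the text is intact
garbled = read_file(path, "utf-8")  # mismatched encoding: the Chinese is garbled
print(ascii(sRet))
print(ascii(garbled))
```

The ASCII part survives either way because GBK and UTF-8 encode ASCII identically; only the Chinese characters are damaged.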

The general file commands can only read or write a file as a whole. For finer-grained operations, use the commands for the specific file type, such as INI or CSV files.

INI File Reading

An INI file, also called an initialization file, is a configuration file format that many Windows programs use to store their settings. Its structure is fairly fixed: the file consists of sections, and each section contains configuration items written as key-value pairs.

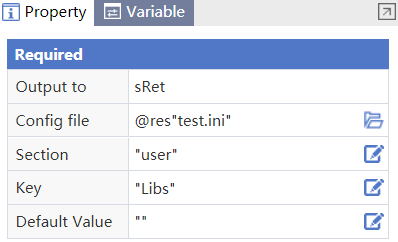

Let us look at the most common INI operation, "Read Key Value". In the "INI Format" directory of "File Processing" in the Command Center, select and insert a "Read Key Value" command. This command reads the value of a specified key in a specified section of an INI file. It has five attributes. The "Configuration file" attribute holds the path of the INI file to read; here it is @res"test.ini", which refers to the test.ini file in the res subdirectory of the process directory. The file contains the following:

```ini
[meta]
Name = mlib
Description = Math library
Version = 1.0

[default]
Libs=defaultLibs
Cflags=defaultCflags

[user]
Libs=userLibs
Cflags=userCflags
```

The "Section name" attribute specifies the section to search; here it is "user", meaning the key is looked up in the [user] section. The "Key name" attribute holds the name of the key to find; here it is "Libs", meaning we want the value after "Libs=". The "Default value" attribute is the value returned when the key cannot be found. The "Output to" attribute holds a string variable, sRet, which receives the value found.

Add an "Output Debugging Information" command to print sRet. After running the process, you can see that the value of sRet is "userLibs".
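For comparison, Python's standard `configparser` module performs the same lookup. This is a minimal sketch, not Laiye RPA's API: the helper name `read_key_value` is hypothetical, and the INI text is embedded as a string instead of being read from test.ini.

```python
import configparser

# Same content as the test.ini example above.
INI_TEXT = """\
[meta]
Name = mlib
Description = Math library
Version = 1.0

[default]
Libs=defaultLibs
Cflags=defaultCflags

[user]
Libs=userLibs
Cflags=userCflags
"""


def read_key_value(ini_text: str, section: str, key: str, default: str = "") -> str:
    """Return the value of key in section, or default if the section or key is missing."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # fallback= is returned instead of raising when the section or key does not exist.
    return parser.get(section, key, fallback=default)


sRet = read_key_value(INI_TEXT, "user", "Libs")
print(sRet)  # userLibs
```

One difference to keep in mind: by default `configparser` treats key names case-insensitively (it lower-cases them) but section names case-sensitively, so "User" would not find the [user] section here.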

CSV File Reading

A CSV file stores tabular data as plain text: each line of the file is one data record, and each record consists of one or more fields separated by commas. CSV is widely used to exchange tabular data between applications built on different platforms, which sidesteps the problem of incompatible data formats.

In Laiye RPA, you can use the "Open CSV file" command to read the contents of a CSV file into a data table, which makes the data easier to process. Data tables are described in the "Data Table" section below.

First, the "Open CSV file" command has two attributes. The "File path" attribute holds the path of the CSV file to read; here it is @res"test.csv", which refers to the test.csv file in the res subdirectory of the process directory. The "Output to" attribute holds a data table object, objDataTable. When the command runs, the contents of test.csv are read into objDataTable. You can add an "Output Debugging Information" command to view the contents of objDataTable.

Next, the "Save CSV file" command also has two attributes. The "Data Table Object" attribute holds the data table to save, here the objDataTable obtained in the previous step, and the "File Path" attribute holds the path of the CSV file to write. Here it is @res"test2.csv", meaning that the data in objDataTable will be saved to test2.csv in the res subdirectory of the process directory.
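The open-then-save round trip can be sketched with Python's `csv` module. This is an illustration only: the helpers `open_csv` and `save_csv` are hypothetical, a `(header, rows)` tuple stands in for Laiye RPA's data table object, UTF-8 encoding is assumed, and temporary files stand in for test.csv and test2.csv. The sample includes a quoted field containing a comma to show why a real CSV parser is used.

```python
import csv
import os
import tempfile


def open_csv(path):
    """Read a CSV file into (header, rows); a stand-in for a data table object."""
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.reader(f)
        header = next(reader)  # first line holds the column names
        rows = list(reader)
    return header, rows


def save_csv(table, path):
    """Write (header, rows) back out as a CSV file, quoting fields where needed."""
    header, rows = table
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


# Temporary files stand in for @res"test.csv" and @res"test2.csv" (assumption).
folder = tempfile.mkdtemp()
src = os.path.join(folder, "test.csv")
with open(src, "w", newline="", encoding="utf-8") as f:
    f.write('Name,Subject,Score\r\n"Zhang, San",Chinese,78\r\nLi Si,Math,65\r\n')

objDataTable = open_csv(src)
print(objDataTable)
save_csv(objDataTable, os.path.join(folder, "test2.csv"))
```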

Data Processing Method

Once data has been read, it needs to be processed. For different data formats, Laiye RPA provides different processing methods and commands, covering data tables, strings, collections, arrays, time, JSON, and regular expressions. These are described below.

Data Table

A data table is a two-dimensional table held in memory. Compared with files stored on the hard disk, data in memory can be processed tens or hundreds of times faster, but memory capacity is relatively small. The usual processing flow is therefore: 1. read a batch of data into memory and store it as a data table; 2. process the data table in memory; 3. when processing is complete, write the data back to the hard disk; 4. move on to the next batch. This greatly speeds up data processing without being limited by the amount of memory available.
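The four-step flow can be sketched in Python as follows. This only illustrates the idea and is not how Laiye RPA works internally; `process_in_batches` and the `transform` callback are hypothetical names, and in-memory text streams stand in for files on disk.

```python
import csv
import io
from itertools import islice


def process_in_batches(src, dst, batch_size, transform):
    """Copy CSV rows from src to dst, keeping at most batch_size rows in memory."""
    reader = csv.reader(src)
    writer = csv.writer(dst)
    batches = 0
    while True:
        batch = list(islice(reader, batch_size))  # 1. read one batch into memory
        if not batch:
            break
        processed = [transform(row) for row in batch]  # 2. process it in memory
        writer.writerows(processed)  # 3. write the results back out
        batches += 1  # 4. loop round for the next batch
    return batches


src = io.StringIO("a,1\nb,2\nc,3\n")
dst = io.StringIO()
count = process_in_batches(src, dst, 2, lambda row: [row[0].upper(), str(int(row[1]) * 10)])
print(count, repr(dst.getvalue()))
```

The batch size is the knob that trades memory use against the number of read and write rounds.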

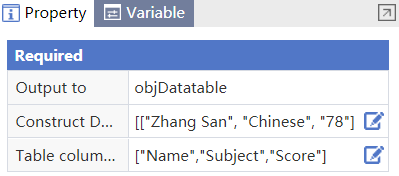

Let us first see how to build a data table. In the "Data Table" directory of "File Processing" in the Command Center, select and insert a "Build Data Table" command. This command generates a data table from a table header and the table data. It has three attributes. The "Table column header" attribute holds the column headers; here it is ["Name", "Subject", "Score"]. The "Construct data" attribute holds the rows of the table; here it is [["Zhang San", "Chinese", "78"], ["Zhang San", "English", "81"], ["Zhang San", "Math", "75"], ["Li Si", "Chinese", "88"], ["Li Si", "English", "84"], ["Li Si", "Math", "65"]].

The resulting data table is stored in the variable objDatatable given in the "Output to" attribute, as shown below:

| Name | Subject | Score |
|---|---|---|
| Zhang San | Chinese | 78 |
| Zhang San | English | 81 |
| Zhang San | Math | 75 |
| Li Si | Chinese | 88 |
| Li Si | English | 84 |
| Li Si | Math | 65 |
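As a rough Python analogue of "Build Data Table", the sketch below pairs each row with the header, giving one dictionary per row. The helper `build_data_table` is hypothetical, and a list of dictionaries is only a stand-in for Laiye RPA's data table object.

```python
def build_data_table(header, data):
    """Turn a header plus a list of rows into a list of row dictionaries."""
    for row in data:
        if len(row) != len(header):
            raise ValueError(f"row {row!r} has {len(row)} fields, expected {len(header)}")
    return [dict(zip(header, row)) for row in data]


header = ["Name", "Subject", "Score"]
data = [
    ["Zhang San", "Chinese", "78"],
    ["Zhang San", "English", "81"],
    ["Zhang San", "Math", "75"],
    ["Li Si", "Chinese", "88"],
    ["Li Si", "English", "84"],
    ["Li Si", "Math", "65"],
]
objDatatable = build_data_table(header, data)
for row in objDatatable:
    print(row["Name"], row["Subject"], row["Score"])
```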

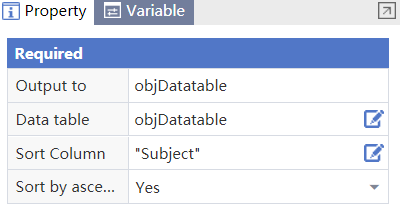

Once the data table is built, you can read, sort, filter, and otherwise operate on it. Let us start with sorting. The "Data table sorting" command has four attributes. The "Data table" attribute holds the table to sort; here it is the objDatatable obtained in the previous step. The "Column sorting" attribute specifies the column to sort by; here it is "Subject". The "Ascending sorting" attribute sets the sort order: "Yes" means ascending and "No" means descending.

The "Output to" attribute receives the sorted data table; here it is again objDatatable. Use the "Output Debugging Information" command to view the table sorted in ascending order by "Subject" (the serial number is each row's position in the original table):

| Serial number | Name | Subject | Score |
|---|---|---|---|
| 0 | Zhang San | Chinese | 78 |
| 3 | Li Si | Chinese | 88 |
| 1 | Zhang San | English | 81 |
| 4 | Li Si | English | 84 |
| 2 | Zhang San | Math | 75 |
| 5 | Li Si | Math | 65 |
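A Python sketch of the sort helps explain the serial numbers: each row keeps its original position, and a stable sort leaves rows with equal keys in their original order. This is an analogy, not Laiye RPA's implementation; `sort_data_table` is a hypothetical helper and the data table is modelled as a list of dictionaries.

```python
header = ["Name", "Subject", "Score"]
data = [
    ["Zhang San", "Chinese", "78"],
    ["Zhang San", "English", "81"],
    ["Zhang San", "Math", "75"],
    ["Li Si", "Chinese", "88"],
    ["Li Si", "English", "84"],
    ["Li Si", "Math", "65"],
]
objDatatable = [dict(zip(header, row)) for row in data]


def sort_data_table(table, column, ascending=True):
    """Return (serial_number, row) pairs sorted by one column.

    sorted() is stable, even with reverse=True, so rows with equal keys keep
    their original relative order.
    """
    numbered = list(enumerate(table))
    return sorted(numbered, key=lambda pair: pair[1][column], reverse=not ascending)


for serial, row in sort_data_table(objDatatable, "Subject"):
    print(serial, row["Name"], row["Subject"], row["Score"])
```

Note that the scores are stored as strings here, so sorting by "Score" would compare them as text; converting them with `int()` in the key function gives a numeric sort.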

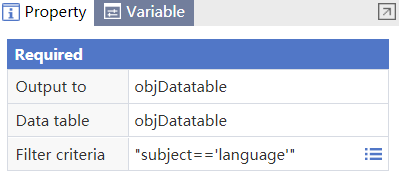

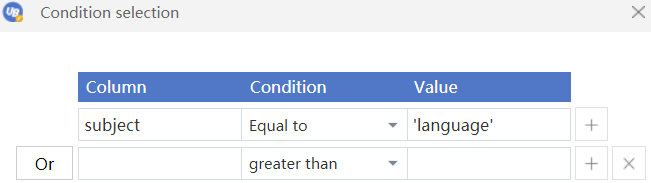

Next, let us look at data filtering. The "Data Filtering" command has four attributes. The "Data Table" attribute holds the table to filter; here it is the objDatatable obtained in the previous step. The "Filter Criteria" attribute specifies the condition that rows must meet to be kept. Click the "More" button on the right side of the property field to open the "Filter Criteria" input box. Each condition combines a column, a comparison, and a value; for example, "Subject == 'Chinese'" selects all rows whose subject is Chinese. You can add more conditions and combine them with "and" or "or".

Use the "Output Debugging Information" command to view the filtered data table as follows:

| Serial number | Name | Subject | Score |
|---|---|---|---|
| 0 | Zhang San | Chinese | 78 |
| 3 | Li Si | Chinese | 88 |
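The same filter, written as a Python predicate, looks like this. It is a sketch under the same assumptions as before (a list of dictionaries stands in for the data table, and `filter_data_table` is a hypothetical helper); it also shows two conditions joined with "and".

```python
header = ["Name", "Subject", "Score"]
data = [
    ["Zhang San", "Chinese", "78"],
    ["Zhang San", "English", "81"],
    ["Zhang San", "Math", "75"],
    ["Li Si", "Chinese", "88"],
    ["Li Si", "English", "84"],
    ["Li Si", "Math", "65"],
]
objDatatable = [dict(zip(header, row)) for row in data]


def filter_data_table(table, predicate):
    """Keep (serial_number, row) pairs whose row satisfies predicate."""
    return [(serial, row) for serial, row in enumerate(table) if predicate(row)]


# Subject == 'Chinese'
chinese = filter_data_table(objDatatable, lambda r: r["Subject"] == "Chinese")
for serial, row in chinese:
    print(serial, row["Name"], row["Subject"], row["Score"])

# Two conditions combined with "and"; scores are strings, so convert before comparing.
high_chinese = filter_data_table(
    objDatatable, lambda r: r["Subject"] == "Chinese" and int(r["Score"]) > 80
)
print(high_chinese)
```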

JSON

JSON is a lightweight data interchange format for storing and exchanging text data. It is easy for humans to read and write and easy for machines to parse and generate. JSON is used much like XML, but it is generally more compact and faster and easier to parse.

Laiye RPA has two JSON commands: "Convert JSON string to data" and "Convert data to JSON string". "Data" here means data in dictionary format; in other words, a JSON object is equivalent to a dictionary. Converting between JSON strings and JSON objects, combined with dictionary operations, covers virtually all processing of JSON data.

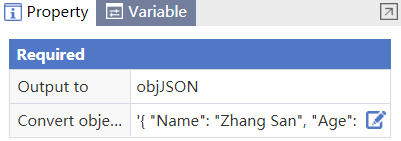

Let us first look at the "Convert JSON string to data" command, which converts a JSON string into a JSON object. It has two attributes. The "Convert object" attribute holds the JSON string; here it is '{"Name": "Zhang San", "Age": "26"}'. Note that until now strings have been delimited with double quotes "", but here the string is delimited with single quotes ''. This is because the keys and values inside the JSON string are themselves enclosed in double quotes, so the whole JSON string uses single quotes as its delimiters.

The "Output to" attribute receives the converted JSON object; here it is objJSON. Printing the JSON object with the "Output Debugging Information" command gives {"Name": "Zhang San", "Age": "26"}. You may wonder what the difference is between the JSON string and the JSON object, since they look the same. They are in fact very different: one is a string and the other is an object. Let us look at how JSON objects are manipulated.

Add an "Output Debugging Information" command that prints objJSON["Name"]. The result is "Zhang San", showing that the values in a JSON object can be accessed with square brackets.

```
TracePrint(objJSON["Name"])
```

Since values can be accessed this way, they can also be modified. Add an assignment statement that changes the "Age" of objJSON to "30".

```
objJSON["Age"] = "30"
```

Finally, use the "Convert data to JSON string" command to convert the modified JSON object back into a string. This command has two attributes. The "Convert Object" attribute holds the JSON object to convert, here the objJSON used above. The "Output To" attribute holds a string variable that receives the resulting JSON string. Printing it with the "Output Debugging Information" command gives {"Name": "Zhang San", "Age": "30"}, showing that the JSON object was successfully modified.
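The whole round trip maps directly onto Python's standard `json` module, where `json.loads` plays the role of "Convert JSON string to data" and `json.dumps` the role of "Convert data to JSON string". This is a Python analogy, not Laiye RPA code; it makes the string-versus-object distinction visible through the types.

```python
import json

json_text = '{"Name": "Zhang San", "Age": "26"}'  # a str: just characters
objJSON = json.loads(json_text)                    # a dict: keys can be looked up

print(type(json_text).__name__, type(objJSON).__name__)  # str dict
print(objJSON["Name"])                                    # Zhang San

objJSON["Age"] = "30"         # modify the object like any dictionary
sRet = json.dumps(objJSON)    # back to a JSON string
print(sRet)                   # {"Name": "Zhang San", "Age": "30"}
```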

String

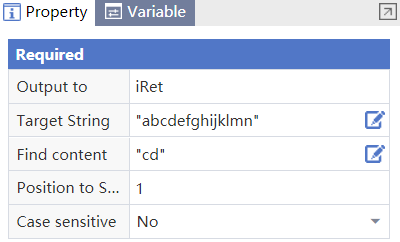

Strings are among the most common data types, and string operations are among the most common data operations, so becoming proficient with them will pay off in later development. Let us start with a classic command, "Find String". This command checks whether a specified substring occurs in a string. It has five attributes. The "Target string" attribute holds the string to search; here it is "abcdefghijklmn". The "Find content" attribute holds the substring to look for; here it is "cd". The "Start search position" attribute sets where the search begins; here it is 1, the first character. The "Case sensitive" attribute sets whether the search distinguishes upper and lower case; the default is "No". The "Output to" attribute holds a variable, iRet, which receives the position found. Run the command and print iRet: the output is 3, meaning that "cd" starts at the third character of "abcdefghijklmn". If the substring is not found, the command returns 0.
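Python's `str.find` counts from 0 and returns -1 when nothing is found, so mimicking the 1-based positions described above takes one adjustment. The helper `find_string` below is a hypothetical sketch of the command's behavior, not Laiye RPA's API.

```python
def find_string(target: str, content: str, start: int = 1, case_sensitive: bool = False) -> int:
    """1-based position of content in target, searching from start; 0 if absent."""
    if not case_sensitive:
        # Lower-casing both sides is fine for ASCII text like this example.
        target, content = target.lower(), content.lower()
    # str.find is 0-based and returns -1 when nothing is found,
    # so adding 1 gives a 1-based position, or 0 for "not found".
    return target.find(content, start - 1) + 1


iRet = find_string("abcdefghijklmn", "cd")
print(iRet)  # 3
```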

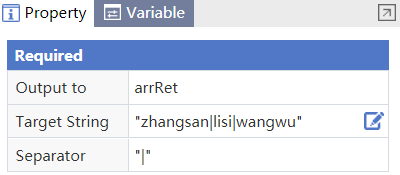

Next comes another classic string operation, the "Split Character" command. This command splits a string into an array using a specified separator, which is handy, for example, for processing lines of a CSV file. It has three attributes. The "Target string" attribute holds the string to split; here it is "zhangsan|lisi|wangwu". The "Separator" attribute holds the symbol used to split the string; here it is "|". The "Output to" attribute receives the resulting array, arrRet. Add an "Output Debugging Information" command and print arrRet: the result is ["zhangsan", "lisi", "wangwu"], showing that "zhangsan|lisi|wangwu" was split at each "|" into an array of three strings.
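In Python, `str.split` does the same job. Because the text mentions CSV files, the sketch also shows a caveat: splitting a CSV line on commas breaks fields that are quoted and contain a comma, which a real CSV parser handles. This is a general Python illustration, not a statement about how Laiye RPA's command treats quotes.

```python
import csv

arrRet = "zhangsan|lisi|wangwu".split("|")
print(arrRet)  # ['zhangsan', 'lisi', 'wangwu']

# A CSV line whose first field is quoted and contains a comma.
line = '"Zhang, San",Chinese,78'
naive = line.split(",")           # splits inside the quotes as well
parsed = next(csv.reader([line]))  # respects the quoting
print(naive)
print(parsed)
```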

Regular Expressions

When processing strings, you often need to check whether a string follows certain complex rules. Regular expressions are the tool for describing such rules. With them, Laiye RPA can search and validate large amounts of text, which is useful for tasks such as data collection and web crawling.

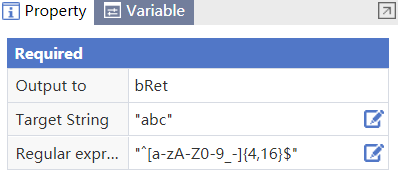

Let us first look at the "Regular expression search test" command, which searches a string with a regular expression and returns true if a match is found and false otherwise. It is used to check whether a string satisfies a condition. It has three attributes. The "Target String" attribute holds the string to test, the "Regular Expression" attribute holds the regular expression, and the "Output to" attribute receives the test result. For example, suppose a website needs to check whether a username entered during registration is valid. It first writes the rules for a valid username as a regular expression and then tests the user's input against it. Here the "Regular Expression" attribute is "^[a-zA-Z0-9_-]{4,16}$", which means the username must be 4 to 16 characters long and may contain only upper- and lower-case letters, digits, underscores, and hyphens. If the "Target String" is "abc_def", the test returns true, meaning that "abc_def" satisfies the regular expression. If it is "abc" or "abcde@", the test returns false: "abc" is only 3 characters long, and "abcde@" contains "@", which the regular expression does not allow.
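The same username check can be tried in Python with the standard `re` module. This is a minimal sketch; `regex_test` is a hypothetical helper that mirrors the search-test behavior. One Python-specific detail: `$` also matches just before a trailing newline, so for strict validation `re.fullmatch` without the anchors is the safer choice.

```python
import re

USERNAME_PATTERN = r"^[a-zA-Z0-9_-]{4,16}$"


def regex_test(target: str, pattern: str) -> bool:
    """Return True if pattern matches somewhere in target."""
    return re.search(pattern, target) is not None


for name in ["abc_def", "abc", "abcde@"]:
    print(name, regex_test(name, USERNAME_PATTERN))

# Stricter alternative: the whole string must match, trailing newline included.
print(re.fullmatch(r"[a-zA-Z0-9_-]{4,16}", "abcd\n") is not None)  # False
```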

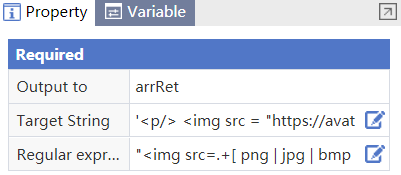

Next, the "Regular expression search" command searches a string with a regular expression and finds all the substrings that match. It has three attributes. The "Target string" attribute holds the string to search, the "Regular expression" attribute holds the regular expression, and the "Output to" attribute receives the search results. For example, suppose the "Target string" attribute holds the following fragment of a web page retrieved by a web crawler:

```html
<p/>
<img src="https://avatar.csdn.net/A/4/C/3.jpg"/>
<p/>
<img src="https://g.csdnimg.cn/static/1x/11.png"/>
<p/>
```

Fill in `<img src=.+?\.(?:png|jpg|bmp|gif)` as the "Regular expression" attribute. It matches text that starts with `<img src=` and ends with one of the image file extensions png, jpg, bmp, or gif. The alternatives are grouped with `(?:...)`, a group that does not capture; writing them in square brackets, as in `[png | jpg | bmp | gif]`, would instead match a single character from that set. The lazy quantifier `.+?` makes each match stop at the first image extension. With this command, all the image links in the crawled page can be extracted.
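The difference between the grouped alternatives and a square-bracket character set is easy to see in Python with `re.findall`. This sketch uses Python's regular expression engine; Laiye RPA's engine may differ in details, so treat it as an illustration of the pattern's logic. The sample tag with an `alt` attribute is made up to expose the bracket version's failure.

```python
import re

# The two image tags from the fragment above.
html = """<p/>
<img src="https://avatar.csdn.net/A/4/C/3.jpg"/>
<p/>
<img src="https://g.csdnimg.cn/static/1x/11.png"/>
<p/>"""

PATTERN = r"<img src=.+?\.(?:png|jpg|bmp|gif)"  # lazy match up to an image extension
matches = re.findall(PATTERN, html)
for m in matches:
    print(m)

# With a character set, .+ runs greedily and stops at the last "g", "p", "i", ... on the line.
sample = '<img src="a.png" alt="big"/>'
print(re.findall(PATTERN, sample))                                # ['<img src="a.png']
print(re.findall(r"<img src=.+[png | jpg | bmp | gif]", sample))  # ['<img src="a.png" alt="big']
```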

For a detailed introduction to regular expressions, see any online regular expression tutorial.

Array

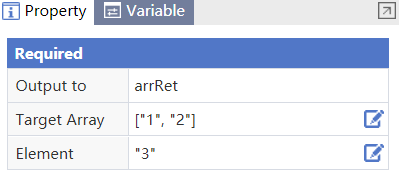

The array commands mainly edit arrays (adding and deleting elements, slicing and merging arrays) and retrieve array information (length, index of an element, and so on). In the "Array" directory of "Data Processing" in the Command Center, select and insert an "Add element at the end of the array" command, which appends an element to an array. It has three attributes. The "Target array" attribute holds the array before the element is added; here it is ["1", "2"]. The "Add element" attribute holds the element to add; here it is "3". The "Output to" attribute receives the resulting array; printing it gives ["1", "2", "3"].

Next, the "Filter array data" command quickly filters the elements of an array, keeping or removing those that meet a condition. It has four attributes. The "Target array" attribute holds the array to filter; here it is ["12", "23", "34"]. The "Filter content" attribute holds the filter condition; here it is "2", meaning that an element meets the condition if it contains "2". The "Retain filter text" attribute has two options: "Yes" keeps the elements that meet the condition and removes the rest; "No" removes the elements that meet the condition and keeps the rest. The "Output to" attribute receives the filtered array, arrRet.

With "Retain filter text" set to "Yes", printing arrRet gives ["12", "23"]: the elements containing "2" are kept. With it set to "No", printing arrRet gives ["34"]: the elements containing "2" are removed.
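Both array commands have short Python counterparts. In this sketch, `add_to_end` and `filter_array` are hypothetical helpers modelled on the behavior described above; they return new lists rather than modifying their input, mirroring the separate "Output to" attribute.

```python
def add_to_end(arr, element):
    """Return a new list with element appended; the input list is not modified."""
    return arr + [element]


def filter_array(arr, content, retain=True):
    """Keep (retain=True) or drop (retain=False) the elements that contain content."""
    return [item for item in arr if (content in item) == retain]


print(add_to_end(["1", "2"], "3"))                  # ['1', '2', '3']
print(filter_array(["12", "23", "34"], "2", True))   # ['12', '23']
print(filter_array(["12", "23", "34"], "2", False))  # ['34']
```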

Mathematics

The mathematical commands are in the "Mathematics" directory of "Data Processing" in the Command Center and cover a range of mathematical operations. Since these commands are independent of one another and used in similar ways, we describe only one of them here.

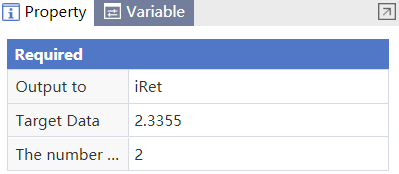

Select and insert a "round value" command, which rounds a number. It has three attributes. The "target data" attribute holds the number to round, the "reserved decimal places" attribute sets how many decimal places to keep, and the "output to" attribute receives the rounded result.
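Rounding has a subtlety worth testing in any tool: how halves are treated. The text does not say which rule "round value" uses, so check it with a value such as 2.5. For comparison, here is a Python sketch (the helper `round_value` is hypothetical) that rounds halves away from zero using the `decimal` module, next to Python's built-in `round`, which rounds halves to even and works on the binary floating-point value.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_value(value: float, places: int) -> float:
    """Round to `places` decimal places, with halves rounded away from zero."""
    # Going through str() uses the shortest decimal form, e.g. "2.675",
    # rather than the slightly smaller binary value actually stored.
    quantum = Decimal(1).scaleb(-places)  # places=2 -> Decimal("0.01")
    return float(Decimal(str(value)).quantize(quantum, rounding=ROUND_HALF_UP))


print(round_value(3.14159, 2))  # 3.14
print(round_value(2.675, 2))    # 2.68
print(round(2.675, 2))          # 2.67: the stored binary value is just below 2.675
print(round(2.5))               # 2: built-in round() sends halves to the even neighbour
```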

Time

The time commands mainly convert between times and strings and operate on time values. First, let us see how to get the current time. In the "Time" directory of "Data Processing" in the Command Center, select and insert a command that gets the current time. This command returns the number of days elapsed from January 1, 1900 to now. It has only one attribute, "Output to", which receives the current time; here it is dTime. After the process runs, an "Output Debugging Information" command prints 43771.843969907, meaning that 43771.843969907 days have passed since January 1, 1900; the fractional part represents the time of day. As a rough check, 43,771 days is about 119.8 years, which places the date in late 2019.
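A day count like this can be converted to a calendar date in Python. The sketch assumes the spreadsheet-style serial date convention (as used by Excel and OLE Automation), whose day 0 is December 30, 1899; that is an assumption about Laiye RPA, not something the text confirms. Under it, 43771.843969907 falls on November 2, 2019, the same date as in the formatting example below. The helper names are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: day 0 is 1899-12-30, the epoch of Excel / OLE Automation serial dates.
EPOCH = datetime(1899, 12, 30)


def serial_to_datetime(serial: float) -> datetime:
    """Convert a day count with a fractional time of day into a datetime."""
    return EPOCH + timedelta(days=serial)


def datetime_to_serial(dt: datetime) -> float:
    """Convert a datetime back into a (fractional) day count."""
    return (dt - EPOCH) / timedelta(days=1)


dTime = 43771.843969907
dt = serial_to_datetime(dTime)
print(dt)  # 2019-11-02, a little after 20:15
```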

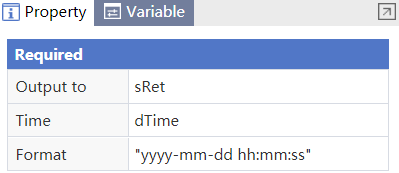

Once you have a time value, you can use the "Format Time" command to convert it into a string in various formats. The command has three attributes. The "Time" attribute holds the time value obtained above, dTime. The "Format" attribute holds the time format, where the year (yyyy) takes 4 digits and the month (mm), day (dd), hours (hh), minutes (mm), and seconds (ss) each take 2 digits. For example, the format "yyyy-mm-dd hh:mm:ss" (where the first mm is the month and the second the minutes) produces a string such as "2019-11-02 20:29:58". The "Output to" attribute receives the formatted string.

Besides converting time values into formatted strings, you can also extract individual components directly. For example, the "Get month" command returns the month of dTime; the commands for the other components work in the same way.
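Python's `datetime.strftime` does the same formatting with %-codes instead of yyyy/mm/dd-style placeholders, which also avoids the month/minute ambiguity of mm. This is a Python analogy, not Laiye RPA's format syntax; the example value is the one from the text above.

```python
from datetime import datetime

dTime = datetime(2019, 11, 2, 20, 29, 58)  # example value from the text above

# %Y = 4-digit year, %m = month, %d = day,
# %H = hour (00-23), %M = minute, %S = second.
text = dTime.strftime("%Y-%m-%d %H:%M:%S")
print(text)         # 2019-11-02 20:29:58
print(dTime.month)  # 11, the counterpart of the "Get month" command
```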

Collection

The collection commands mainly create collections, add and remove elements, and perform operations between collections. First, let us create a collection. In the "Collection" directory of "Data Processing" in the Command Center, select and insert a "Create collection" command. This command has only one attribute, "Output to", which receives the new collection; here it is ObjSet.

Next, we add elements to ObjSet with an "Add element to collection" command. This command has two attributes. The "Set" attribute holds the collection created in the previous step, ObjSet, and the "Add element" attribute holds the element to add, which can be a constant, such as a number or a string, or a variable.

Can numeric and string elements appear in the same collection? Yes. Call the "Add element to collection" command twice, adding the number 1 once and the string "2" once. Printing the debugging information after running shows that both elements were added to the collection.

Finally, let us look at operations between collections, using the union as an example. Build two collections by adding elements: one is {1, "2"} and the other is {"1", "2"}. Then add a "Take union" command. It has three attributes. The "Set" and "Comparison set" attributes hold the two collections to combine, and the "Output to" attribute receives the resulting collection. Printing the debugging information after running shows {1, "1", "2"}: the union keeps only one copy of the duplicate element "2", while the number 1 and the string "1" are different elements, so both appear in the union. The key source code is as follows:

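```
// First collection: the number 1 and the string "2"
ObjSet = Set.Create()
Set.Add(ObjSet, 1)
Set.Add(ObjSet, "2")
TracePrint(ObjSet)
// Second collection: the strings "1" and "2"
ObjSet2 = Set.Create()
Set.Add(ObjSet2, "1")
Set.Add(ObjSet2, "2")
// Union: "2" appears once; 1 and "1" are distinct elements
objSetRet = Set.Union(ObjSet, ObjSet2)
TracePrint(objSetRet)
```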
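Python's built-in sets behave the same way in this example, which makes them a convenient place to experiment. This is a Python analogy, not Laiye RPA code. Set iteration order is not fixed, so the result is printed in a sorted order; note also a Python-specific quirk that 1, 1.0, and True count as the same element.

```python
objSet = {1, "2"}             # the number 1 and the string "2"
objSet2 = {"1", "2"}          # the strings "1" and "2"
objSetRet = objSet | objSet2  # union; same as objSet.union(objSet2)
print(sorted(objSetRet, key=repr))  # ['1', '2', 1]

# Python quirk: equal numbers collapse, so this set has a single element.
print(len({1, 1.0, True}))  # 1
```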