Call the large model

Command Description

Calls a large language model to answer questions or process natural-language text.

Command Prototype

LLM.GeneralChat({},"","","",30,0.7,4000)

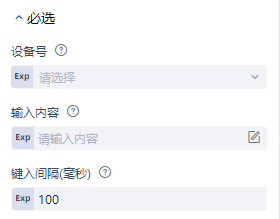

Command Parameters

| Parameter | Required | Type | Default | Description |
|---|---|---|---|---|
| model | True | string | "" | Model name |
| base_url | True | string | "" | Model access URL |
| api_key | True | string | "" | API key |
| content | True | string | "" | User prompt |
| prompt | False | string | "" | System prompt |
| file_path | False | string | "" | Image path (JPEG/PNG/BMP, maximum 10 MB; some models may not support all three image formats) |
| timeout | False | int | 30 | Timeout (seconds) |
| temperature | False | float | 0.7 | Sampling temperature |
| max_output_length | False | int | 4000 | Maximum output length (tokens) |
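The `file_path` constraints in the table (JPEG/PNG/BMP, at most 10 MB) can be checked before the command is called. The following is a minimal Python sketch of such a pre-check, not part of the command itself: the helper name `check_image_path` is hypothetical, and reading "10 MB" as 10 × 1024 × 1024 bytes is an assumption.

```python
import os

# Assumptions: the extension list and size limit mirror the file_path row above;
# treating 10 MB as 10 * 1024 * 1024 bytes is a guess, not a documented value.
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}
MAX_IMAGE_BYTES = 10 * 1024 * 1024


def check_image_path(file_path: str) -> str:
    """Return "" when file_path is empty or acceptable, else a short reason."""
    if file_path == "":
        return ""  # file_path is optional, so an empty value is fine
    ext = os.path.splitext(file_path)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        return "unsupported image format: " + ext
    if not os.path.isfile(file_path):
        return "file not found"
    if os.path.getsize(file_path) > MAX_IMAGE_BYTES:
        return "image larger than 10 MB"
    return ""
```

A non-empty return value explains why the image would likely be rejected. Whether a particular model accepts all three formats still has to be verified against that model.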

Run Example

/***************************Input Text**********************************

Command Prototype:

LLM.GeneralChat({},"","","",30,0.7,4000)

Input Parameters:

model -- Model name

base_url -- Model access URL

api_key -- API key

content -- User prompt

prompt -- System prompt

file_path -- Image path (JPEG/PNG/BMP, max 10MB, some models may not support all three image formats)

timeout -- Timeout (seconds)

temperature -- Temperature

max_output_length -- Maximum output length (tokens)

Output Parameter:

sRet -- The result of command execution is assigned to this variable

Notes:

If the environment variable configuration file for the large model is deleted, or the called model is removed from the large model configuration management interface, the command will not work.

*********************************************************************/

LLM.GeneralChat({"name":"deepseek","model":"openai/deepseek-chat","api_key":"$ENV.deepseek_api_key","base_url":"https://api.deepseek.com"},"Help me translate 'hello' into Chinese","","",30,0.7,4000)
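For orientation, the Python sketch below shows how the arguments in the call above might map onto an OpenAI-style chat-completions request body. This is an assumption about what the command does internally, not something the source states: the helper `build_chat_payload` is hypothetical, mapping `max_output_length` to `max_tokens` is a guess, and the sketch passes the bare model name `deepseek-chat` because the handling of the `openai/` prefix is not described here. No request is sent.

```python
import json


def build_chat_payload(model, content, prompt="", temperature=0.7, max_output_length=4000):
    """Assemble an OpenAI-style chat body from the command's arguments (assumed mapping)."""
    messages = []
    if prompt:
        # The system prompt is optional; include it only when it is non-empty.
        messages.append({"role": "system", "content": prompt})
    messages.append({"role": "user", "content": content})
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_output_length,  # assumed equivalent of max_output_length
    }


payload = build_chat_payload("deepseek-chat", "Help me translate 'hello' into Chinese")
print(json.dumps(payload, indent=2))
```

With an empty system prompt, as in the example call, the body contains a single user message.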

Visual Example